What is Apache Kafka?

Apache Kafka is an open-source distributed event streaming platform designed to handle large volumes of data in real time. It was originally developed at LinkedIn and later open-sourced and donated to the Apache Software Foundation. Kafka scales horizontally, supports real-time processing of data streams, and can also serve as a message-queue-like system for exchanging messages between applications.

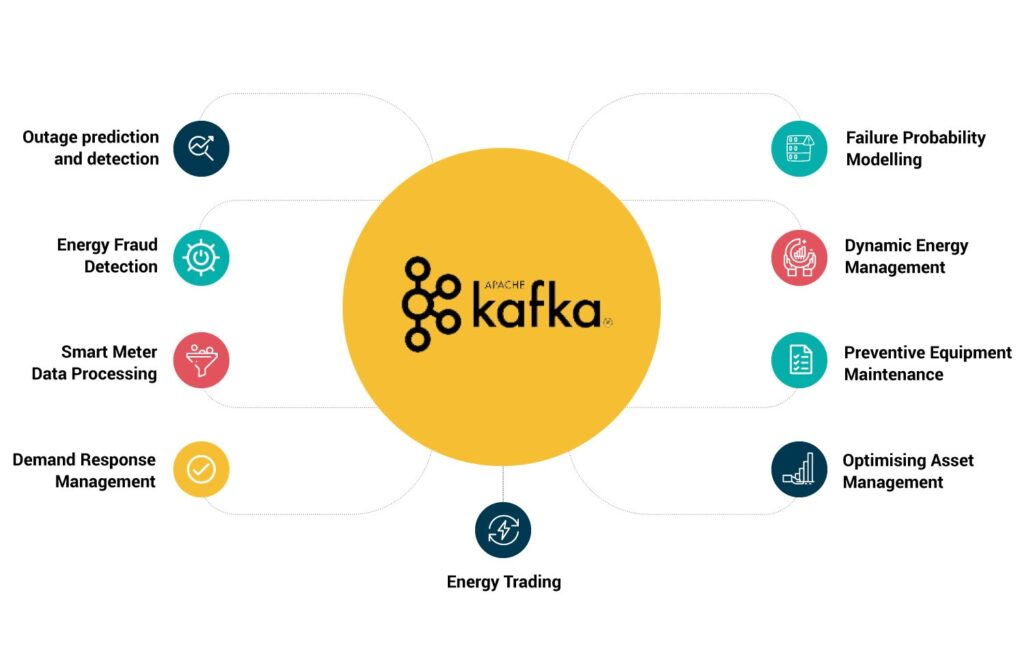

Top 10 Use Cases of Apache Kafka

- Real-time stream processing: Kafka can be used to process real-time data streams from various sources, such as social media, IoT devices, and web applications.

- Log aggregation: Kafka can aggregate logs from various servers and applications, providing a centralized platform for log analysis and troubleshooting.

- Messaging: Kafka can act as a messaging system for exchanging messages between applications in a distributed environment.

- Data integration: Kafka can be used for data integration between various systems and applications, enabling seamless data flow between them.

- Microservices: Kafka can be used as a communication layer between microservices in a distributed architecture.

- Event sourcing: Kafka can be used for event sourcing, which is a pattern used to capture all changes to an application’s state as a sequence of events.

- Real-time analytics: Kafka can be used to feed real-time data streams to analytics platforms, enabling real-time insights and decision-making.

- IoT data processing: Kafka can handle massive amounts of IoT data, enabling real-time processing and analysis of sensor data.

- Fraud detection: Kafka can be used for fraud detection by processing real-time data streams and detecting anomalies in them.

- Machine learning: Kafka can deliver real-time data streams to machine learning applications, supplying events for online prediction and for incremental model training.

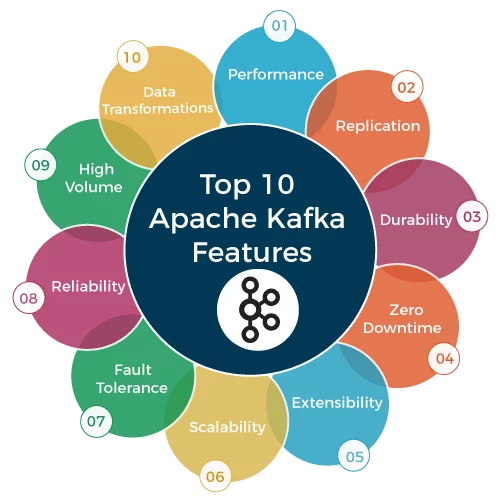

Features of Apache Kafka

- Distributed: Kafka is designed to be a distributed platform, enabling horizontal scalability and fault tolerance.

- High-throughput: A suitably sized Kafka cluster can handle millions of messages per second, making it suitable for high-traffic applications.

- Low-latency: Kafka provides low-latency data processing, enabling real-time data streaming and analysis.

- Flexible: Kafka stores message keys and values as raw bytes and leaves the format to the serializers chosen by producers and consumers, so text, binary, JSON, and other formats can all be used.

- Scalable: Kafka can be scaled horizontally by adding more nodes to the cluster, enabling seamless scalability.

- Reliable: Kafka provides reliable message delivery by storing messages on disk and replicating them across nodes in the cluster.

- Fault-tolerant: Kafka is designed to handle node failures and provide seamless failover, ensuring high availability of data streams.

How Apache Kafka Works: Architecture

Kafka follows a distributed publish-subscribe messaging model. Its core components are:

- Topic: A category or feed name to which records (messages) are published.

- Broker: A single Kafka server that stores and manages the topics and their partitions.

- Partition: Each topic is divided into one or more partitions, allowing for parallelism and distribution of data.

- Producer: A process that publishes messages to a Kafka topic.

- Consumer: A process that subscribes to one or more topics and reads messages published to them.

- Consumer Group: A group of consumers sharing the same group id. Each partition of a subscribed topic is read by only one consumer in the group at a time, which allows a topic to be consumed in parallel.

Kafka brokers form a cluster, and topics are divided into partitions across the brokers. Producers write messages to topics, and consumers read from topics. Kafka retains messages for a configurable retention period, allowing consumers to replay past events.
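The retain-and-replay behaviour described above can be sketched with a toy in-memory log. This is only an illustration of the idea, not how Kafka stores data: the `PartitionLog` class and its methods are hypothetical names, and retention is not modelled.

```python
from dataclasses import dataclass, field


@dataclass
class PartitionLog:
    """Toy in-memory stand-in for one Kafka partition (not the real storage engine)."""
    records: list = field(default_factory=list)

    def append(self, value):
        """Append a record and return its offset (its position in the log)."""
        self.records.append(value)
        return len(self.records) - 1

    def read(self, offset, max_records=10):
        """Return up to max_records starting at offset; reading deletes nothing."""
        return self.records[offset:offset + max_records]


log = PartitionLog()
for event in ["signup", "login", "purchase"]:
    log.append(event)

# The reader chooses where to start, so it can rewind and replay earlier records.
print(log.read(0))  # ['signup', 'login', 'purchase']
print(log.read(1))  # ['login', 'purchase']
```

Because reading never removes records, two independent readers can consume the same log at different positions.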

How to Install Apache Kafka

Installing Apache Kafka is a straightforward process. Kafka runs on the JVM, so a Java runtime must be installed first. Here are the basic steps to install Kafka on a Linux system:

- Download the Kafka binaries from the Apache Kafka website.

- Extract the downloaded archive to a directory of your choice.

- Set the KAFKA_HOME environment variable to the Kafka installation directory.

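- Start the Kafka server by running the following command from the installation directory. On ZooKeeper-based releases, ZooKeeper must be started first (bin/zookeeper-server-start.sh config/zookeeper.properties); on KRaft-based releases, the storage directory must be formatted with bin/kafka-storage.sh before the first start: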

bin/kafka-server-start.sh config/server.properties

Once the server is started, you can use the Kafka command-line tools to create topics, publish and consume messages, and monitor the Kafka cluster.

Basic Tutorials of Apache Kafka: Getting Started

To get started with Apache Kafka, you can follow these basic tutorials:

Installing Apache Kafka

Before you can start using Apache Kafka, you need to install it on your system. Follow these steps to install Kafka:

- Download Apache Kafka: Visit the official Apache Kafka website and download the latest stable release.

- Extract the archive: Unzip the downloaded file to a directory of your choice.

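- Configure ZooKeeper: Older Kafka releases use ZooKeeper to manage cluster metadata. Newer releases can run in KRaft mode without it, and Kafka 4.0 removed ZooKeeper support, so this step and the next apply only to ZooKeeper-based installations. Configure the ZooKeeper properties.

- Start ZooKeeper: Start the ZooKeeper server before starting any broker.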

- Configure Kafka: Update the Kafka server properties, including broker and log settings.

- Start Kafka Brokers: Start one or more Kafka broker instances.

- Verify the installation: Run a simple Kafka command to ensure Kafka is up and running.
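As a rough first check for the last step, the sketch below only tests whether something accepts TCP connections on the broker's listener port. It assumes the default listener at `localhost:9092`, and `broker_reachable` is a hypothetical helper name. A successful connection does not prove the broker is healthy; listing topics with the bundled command-line tools is a stronger check.

```python
import socket


def broker_reachable(host="localhost", port=9092, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


print("broker reachable:", broker_reachable())
```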

Kafka Core Concepts

To effectively use Kafka, you must understand its core concepts. Familiarize yourself with the following terms:

- Topic: A category or feed name to which messages are published.

- Partition: Each topic is divided into one or more partitions, allowing parallelism and distribution of data.

- Broker: A single Kafka server, part of the Kafka cluster.

- Producer: A process that publishes messages to a Kafka topic.

- Consumer: A process that subscribes to one or more topics and reads messages published to them.

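- Consumer Group: A group of consumers sharing the same group id. Each partition of a subscribed topic is read by only one consumer in the group at a time, which allows a topic to be consumed in parallel.

- Offset: A sequential number that identifies the position of a message within a partition. Offsets are unique only within their own partition, not across the topic.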
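To make the relationship between keys, partitions, and offsets concrete, here is a small sketch. It assumes a hypothetical topic with three partitions and uses CRC32 as a stand-in hash; Kafka's Java client hashes keys with murmur2, so real partition numbers will differ.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a key to a partition index. CRC32 is a stand-in, not Kafka's murmur2."""
    return zlib.crc32(key) % num_partitions


NUM_PARTITIONS = 3  # hypothetical partition count
partitions = {p: [] for p in range(NUM_PARTITIONS)}

for key, value in [("user-1", "login"), ("user-2", "login"), ("user-1", "logout")]:
    p = choose_partition(key.encode("utf-8"), NUM_PARTITIONS)
    partitions[p].append(value)
    offset = len(partitions[p]) - 1  # offsets count up separately in each partition
    print(f"key={key} -> partition {p}, offset {offset}")
```

Records with the same key always land in the same partition, which is what preserves their relative order.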

Kafka Producer

Learn how to set up a Kafka producer to publish messages to a topic:

- Create a Kafka producer configuration: Define properties like the broker address and serialization settings.

- Create a Kafka producer instance: Create a KafkaProducer object with the configured properties.

- Send messages: Use the send() method to publish messages to a specific topic.
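The three steps above might look roughly like this in Python. The sketch assumes the third-party kafka-python package and a broker at `localhost:9092`; the topic name `demo-events` and the helper names are made up for illustration. The import is kept inside `run()` so the helpers can be read and exercised without a broker.

```python
import json


def producer_settings(bootstrap_servers="localhost:9092"):
    """Keyword arguments for kafka-python's KafkaProducer (broker address assumed)."""
    return {
        "bootstrap_servers": bootstrap_servers,
        # Serializers turn keys and values into bytes before they are sent.
        "key_serializer": lambda k: k.encode("utf-8"),
        "value_serializer": lambda v: json.dumps(v).encode("utf-8"),
    }


def publish(producer, topic, events):
    """Send (key, value) pairs to topic using any object with send() and flush()."""
    for key, value in events:
        producer.send(topic, key=key, value=value)
    producer.flush()  # block until buffered records have been handed to the broker


def run():
    """Connect to a real broker; requires `pip install kafka-python`."""
    from kafka import KafkaProducer  # third-party import, kept local on purpose

    producer = KafkaProducer(**producer_settings())
    publish(producer, "demo-events", [("user-1", {"action": "login"})])
    producer.close()
```

Calling `run()` with a broker available should publish one record; nothing is sent until it is called.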

Kafka Consumer

Set up a Kafka consumer to read messages from one or more topics:

- Create a Kafka consumer configuration: Define properties like the broker address and deserialization settings.

- Create a Kafka consumer instance: Create a KafkaConsumer object with the configured properties.

- Subscribe to topics: Use the subscribe() method to subscribe to one or more topics.

- Poll for messages: Continuously poll for new messages using the poll() method.
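A consumer following these steps could be sketched as below, again assuming the third-party kafka-python package, a broker at `localhost:9092`, and the illustrative names `demo-group` and `demo-events`. The loop is bounded by `max_polls` so the example terminates; a long-running service would typically poll until shut down.

```python
import json


def consumer_settings(group_id="demo-group", bootstrap_servers="localhost:9092"):
    """Keyword arguments for kafka-python's KafkaConsumer (names are assumptions)."""
    return {
        "bootstrap_servers": bootstrap_servers,
        "group_id": group_id,
        # Start from the oldest retained record when the group has no stored offset.
        "auto_offset_reset": "earliest",
        "value_deserializer": lambda b: json.loads(b.decode("utf-8")),
    }


def drain(consumer, handle, max_polls=3, timeout_ms=1000):
    """Poll a bounded number of times and pass each record's value to handle()."""
    seen = 0
    for _ in range(max_polls):
        batches = consumer.poll(timeout_ms=timeout_ms)  # {partition: [records]}
        for records in batches.values():
            for record in records:
                handle(record.value)
                seen += 1
    return seen


def run():
    """Connect to a real broker; requires `pip install kafka-python`."""
    from kafka import KafkaConsumer  # third-party import, kept local on purpose

    consumer = KafkaConsumer(**consumer_settings())
    consumer.subscribe(["demo-events"])
    drain(consumer, print)
    consumer.close()
```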

Kafka Message Serialization

Understand how to serialize and deserialize messages in Kafka:

- Message Serialization: Convert messages from their native format to a byte array before sending.

- Message Deserialization: Convert received byte arrays back to their original format for consumption.
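As a minimal illustration of that round trip, the sketch below uses JSON as the wire format. The `OrderPlaced` event type is hypothetical, and JSON is only one option; schema-based formats such as Avro or Protobuf are also commonly used with Kafka.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class OrderPlaced:
    """Hypothetical event type used only for this example."""
    order_id: int
    amount: float


def serialize(event: OrderPlaced) -> bytes:
    """Native object -> JSON text -> UTF-8 bytes, ready to hand to a producer."""
    return json.dumps(asdict(event)).encode("utf-8")


def deserialize(payload: bytes) -> OrderPlaced:
    """UTF-8 bytes -> JSON text -> native object, as a consumer would do."""
    return OrderPlaced(**json.loads(payload.decode("utf-8")))


payload = serialize(OrderPlaced(order_id=42, amount=19.99))
print(payload)               # b'{"order_id": 42, "amount": 19.99}'
print(deserialize(payload))  # OrderPlaced(order_id=42, amount=19.99)
```

Producer and consumer must agree on the format, since the broker itself only sees bytes.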

Kafka Producers with Partitions and Replicas

Explore how Kafka handles data distribution and fault tolerance through partitions and replicas:

- Understanding Partitions: Learn about the role of partitions in distributing data across brokers.

- Replication Factor: Configure replication to ensure data durability and fault tolerance.
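The effect of the replication factor can be illustrated with a simplified placement model. The round-robin scheme and the function names below are assumptions for illustration; Kafka's actual replica assignment is more involved (it also considers racks, for example).

```python
def assign_replicas(num_partitions, brokers, replication_factor):
    """Round-robin replica placement; a simplification of what Kafka actually does."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment = {}
    for p in range(num_partitions):
        # The first broker in each list plays the leader in this toy model.
        assignment[p] = [
            brokers[(p + r) % len(brokers)] for r in range(replication_factor)
        ]
    return assignment


def surviving_replicas(assignment, failed_broker):
    """Replicas still available for each partition after one broker fails."""
    return {
        p: [b for b in replicas if b != failed_broker]
        for p, replicas in assignment.items()
    }


layout = assign_replicas(num_partitions=3, brokers=[1, 2, 3], replication_factor=2)
print(layout)                         # {0: [1, 2], 1: [2, 3], 2: [3, 1]}
print(surviving_replicas(layout, 2))  # {0: [1], 1: [3], 2: [3, 1]}
```

With a replication factor of 2, every partition still has at least one copy after a single broker failure; with a factor of 1, some partitions would have none.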

Kafka Consumer Groups and Offset Management

Learn how Kafka supports parallelism and scalability through consumer groups:

- Consumer Group Concepts: Understand how consumers with the same group id form a consumer group.

- Offset Management: Learn about offset management to keep track of consumed messages.
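Both ideas can be sketched with a toy model. The round-robin assignment and the `OffsetStore` class are hypothetical simplifications: in a real cluster the group coordinator assigns partitions, and committed offsets are stored by Kafka itself.

```python
def assign_partitions(partitions, members):
    """Round-robin partitions over group members; each partition gets one owner."""
    owners = {m: [] for m in members}
    for i, p in enumerate(sorted(partitions)):
        owners[members[i % len(members)]].append(p)
    return owners


class OffsetStore:
    """Toy stand-in for committed offsets, keyed by (group id, partition)."""

    def __init__(self):
        self._committed = {}

    def commit(self, group, partition, next_offset):
        """Record the offset of the next record the group should read."""
        self._committed[(group, partition)] = next_offset

    def position(self, group, partition):
        """Where the group resumes; 0 if it has never committed."""
        return self._committed.get((group, partition), 0)


print(assign_partitions([0, 1, 2, 3], ["c1", "c2"]))  # {'c1': [0, 2], 'c2': [1, 3]}
print(assign_partitions([0, 1], ["c1", "c2", "c3"]))  # {'c1': [0], 'c2': [1], 'c3': []}

store = OffsetStore()
store.commit("billing", 0, 5)          # records 0..4 processed; resume at 5
print(store.position("billing", 0))    # 5
print(store.position("analytics", 0))  # 0
```

The second assignment shows why adding more consumers than partitions does not help: the extra consumer sits idle. The last two lines show that each group tracks its own position independently.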

By following these tutorials, you can get a basic understanding of how Kafka works and start building your own Kafka-based applications.
