Have you ever heard of the vanishing gradient problem? If not, then you are in for a treat! In this article, we will delve into the depths of this infamous issue that has plagued the world of deep learning.

Introduction

Before we dive into the vanishing gradient problem, let’s first understand what deep learning is. In a nutshell, deep learning is a subset of machine learning that involves training artificial neural networks to perform various tasks. These tasks can range from image recognition to natural language processing.

The Vanishing Gradient Problem Explained

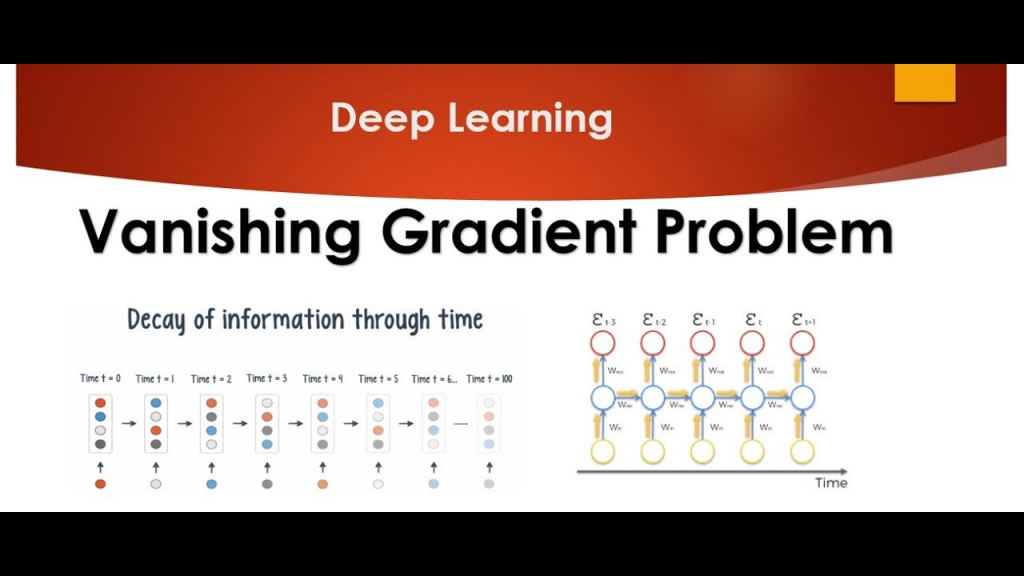

Now, let’s talk about the vanishing gradient problem. The vanishing gradient problem occurs when the gradients in a deep neural network become extremely small, making it difficult for the network to learn. In other words, as the network becomes deeper, the gradients tend to vanish, making it difficult for the network to adjust its weights.

Why Does the Vanishing Gradient Problem Occur?

The vanishing gradient problem occurs due to the nature of the activation functions used in deep neural networks. Activation functions are used to introduce non-linearity into the network, allowing it to learn more complex functions. However, some activation functions, such as the sigmoid function, have a derivative that is close to zero. As a result, when the network becomes deeper, the gradients become smaller and smaller, ultimately leading to the vanishing gradient problem.

The Effects of the Vanishing Gradient Problem

The vanishing gradient problem can have severe consequences on the performance of a deep neural network. When the gradients become smaller and smaller, the network becomes increasingly difficult to train. This can result in longer training times, and in some cases, the network may not converge at all.

Solutions to the Vanishing Gradient Problem

Fortunately, there are several solutions to the vanishing gradient problem. One solution is to use activation functions that have a derivative that is not close to zero, such as the Rectified Linear Unit (ReLU) function. Another solution is to use normalization techniques, such as Batch Normalization, which can help stabilize the gradients during training.

Conclusion

In conclusion, the vanishing gradient problem is a significant issue that has plagued the world of deep learning. It occurs when the gradients in a deep neural network become extremely small, making it difficult for the network to learn. However, with the use of proper activation functions and normalization techniques, the vanishing gradient problem can be overcome.

- Why Can’t I Make Create A New Folder on External Drive on Mac – Solved - April 28, 2024

- Tips on How to Become a DevOps Engineer - April 28, 2024

- Computer Programming Education Requirements – What You Need to Know - April 28, 2024