What is Docker?

Docker is a platform for developing, shipping, and running applications in containers. Containers are lightweight, portable, self-sufficient units that bundle an application with its dependencies, so it behaves consistently across different environments. Docker provides a standardized way to package, distribute, and deploy software, which makes applications easier to run reliably wherever a container runtime is available.

Key Concepts in Docker:

- Containerization: Docker containers encapsulate an application and its dependencies, including libraries, runtime, and system tools, into a single package. This package can run consistently on any environment that supports Docker.

- Images: Docker images are lightweight, standalone, and executable packages that include everything needed to run a piece of software, including the code, runtime, libraries, and system tools.

- Dockerfile: A Dockerfile is a text file containing the instructions for building a Docker image. It specifies the base image, adds dependencies, and defines the steps that produce the final image.

- Registry: Docker images are stored and shared through Docker registries. Docker Hub is a public registry, and organizations often use private registries to manage and distribute their custom images.

- Container Orchestration: Docker can be integrated with orchestration tools like Kubernetes to manage and scale containerized applications in production environments.

What are the top use cases of Docker?

Top Use Cases of Docker:

- Application Packaging and Deployment:

  - Docker simplifies the packaging of applications and their dependencies into containers, ensuring consistency between development and production environments.

  - Applications packaged in Docker containers can be easily deployed on various platforms without worrying about differences in the underlying infrastructure.

- Microservices Architecture:

  - Docker is well-suited for microservices-based architectures where applications are broken down into smaller, independent services.

  - Each microservice can be packaged and deployed as a separate container, allowing for scalability, flexibility, and easier maintenance.

- DevOps and Continuous Integration/Continuous Deployment (CI/CD):

  - Docker facilitates CI/CD pipelines by providing a consistent environment for building, testing, and deploying applications.

  - Developers can use the same Docker image in development and production, reducing the chances of “it works on my machine” issues.

- Isolation and Resource Efficiency:

  - Containers provide process and resource isolation, allowing multiple containers to run on the same host without interfering with each other.

  - Docker enables efficient resource utilization because containers share the host OS kernel instead of each running a full guest operating system, as virtual machines do. The trade-off is that this isolation is weaker than a virtual machine's.

- Hybrid and Multi-Cloud Deployments:

  - Docker containers are portable, making it easy to run applications across different cloud providers or on-premises infrastructure.

  - Organizations can adopt a hybrid or multi-cloud strategy with confidence, knowing that their applications packaged in Docker containers can run consistently across environments.

- Scalability and Load Balancing:

  - Docker enables easy scaling of applications by creating multiple instances of containers to handle increased load.

  - Container orchestration tools, such as Kubernetes, can automate the deployment, scaling, and management of containerized applications.

- Testing and QA:

  - Docker containers provide a consistent testing environment, allowing developers and QA teams to test applications in an environment that closely mirrors production.

  - Test environments can be created on-demand using Docker, improving efficiency and reducing the time needed for testing.

- Legacy Application Modernization:

  - Docker can be used to containerize and modernize legacy applications, making them more portable, scalable, and easier to manage.

Overall, Docker has become a fundamental tool in modern software development and deployment, offering benefits in terms of agility, consistency, and efficiency across the entire software development lifecycle.

What are the features of Docker?

Features of Docker:

- Containerization: Docker uses container technology to encapsulate applications and their dependencies, ensuring consistency across different environments.

- Portability: Docker containers can run on any system that supports Docker, providing a consistent environment from development to production.

- Efficiency: Containers share the host OS kernel, making them lightweight and enabling efficient resource utilization compared to traditional virtualization.

- Isolation: Containers provide process and resource isolation, allowing multiple containers to run on the same host without interfering with each other.

- Versioning and Rollback: Docker images can be versioned with tags, so rolling back means redeploying a container from a previous tag.

- Docker Hub: Docker Hub is a public registry where users can find and share Docker images. It serves as a central repository for container images.

- Docker Compose: Docker Compose is a tool for defining and running multi-container Docker applications. It allows the definition of multi-container environments using a YAML file.

- Dockerfile: Dockerfiles are used to create Docker images. They contain a set of instructions for building a Docker image, specifying the base image, adding dependencies, and defining the application’s setup.

- Networking: Docker provides networking capabilities to enable communication between containers and with the external world. Docker also supports user-defined networks for better control over container communication.

- Orchestration: Docker can be integrated with orchestration tools like Kubernetes or Docker Swarm to manage and scale containerized applications in production environments.

- Security: Docker isolates containers using Linux kernel features such as namespaces (separate process, network, and filesystem views) and control groups (limits on CPU, memory, and other resources).

- Logging and Monitoring: Docker provides logging capabilities, and it can be integrated with various monitoring tools to track the performance and health of containers and applications.
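The resource-limit and monitoring features above can be sketched with a few `docker run` flags. This is a minimal illustration, not a tuning recommendation: the container name `limited-demo` and the limit values are assumptions chosen for the example.

```shell
# Sketch: start a container with explicit resource limits (enforced via cgroups).
# "limited-demo" is a hypothetical container name; the limits are arbitrary examples.
run_limited() {
  docker run -d --name limited-demo \
    --memory 256m \
    --cpus 0.5 \
    --pids-limit 100 \
    alpine sleep 300
}

# One-shot snapshot of the container's CPU and memory usage against its limits.
show_usage() {
  docker stats --no-stream limited-demo
}

# Print whatever the container has written to stdout/stderr.
show_logs() {
  docker logs limited-demo
}
```

On a machine with Docker running, call `run_limited`, then `show_usage`, and clean up with `docker rm -f limited-demo`.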

What is the workflow of Docker?

Workflow of Docker:

The typical workflow of Docker involves several steps, from creating a Docker image to deploying and managing containers. Here’s a simplified overview:

1. Write Code:

- Develop your application code and any necessary dependencies.

2. Dockerize Application:

- Create a Dockerfile that describes how to build your application into a Docker image.

- Define the base image, add dependencies, copy files, and set up the application.

3. Build Docker Image:

- Use the Docker CLI to build a Docker image from your Dockerfile and application code.

docker build -t your-image-name:tag .

4. Run Container Locally:

- Run a container locally to test your application in a Docker environment.

docker run -p 8080:80 your-image-name:tag

5. Push to Docker Hub (Optional):

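- Optionally, push your Docker image to Docker Hub or another container registry for sharing and deployment. For Docker Hub, the image must first be tagged with your account namespace.

docker tag your-image-name:tag your-username/your-image-name:tag

docker push your-username/your-image-name:tag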

6. Orchestrate (Optional):

- If deploying in a production environment, use an orchestration tool like Docker Swarm or Kubernetes to manage and scale your containers.

7. Deploy on Production:

- Deploy your Docker image on production servers or cloud infrastructure.

8. Monitor and Scale (Optional):

- Monitor the performance and health of your containers.

- Scale your application by creating additional instances of containers as needed.

9. Update and Rollback:

- Update your application by creating a new version of the Docker image.

- Optionally, roll back to a previous version if issues arise.

This workflow allows developers to create, test, and deploy applications consistently across different environments, promoting a more streamlined and reliable software development and deployment process.
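The push and rollback steps of this workflow could look roughly like the sketch below. The registry host `registry.example.com`, the repository `my-team/my-app`, the container name `my-app`, and the version numbers are all hypothetical placeholders.

```shell
# Sketch of the push and rollback steps. All names below are hypothetical.
REGISTRY="registry.example.com"
REPOSITORY="my-team/my-app"

# Compose a full image reference: <registry>/<repository>:<tag>
image_ref() {
  printf '%s/%s:%s\n' "$REGISTRY" "$REPOSITORY" "$1"
}

# Build the image from the current directory and push it under a version tag.
release() {
  docker build -t "$(image_ref "$1")" .
  docker push "$(image_ref "$1")"
}

# Roll back by replacing the running container with one from an older tag.
rollback() {
  docker rm -f my-app 2>/dev/null || true   # remove the current container, if any
  docker run -d --name my-app -p 8080:80 "$(image_ref "$1")"
}

# Example: release 1.1.0, then return to 1.0.0 if problems appear.
# release 1.1.0
# rollback 1.0.0
```

Keeping every release under its own tag, rather than overwriting a single tag, is what makes the rollback a one-line operation.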

How does Docker work, and what is its architecture?

Docker is a platform that enables developers to package applications into standardized units called containers. These containers include all the necessary dependencies and libraries, allowing them to run consistently across different environments.

Following is an overview of how Docker works and its architecture:

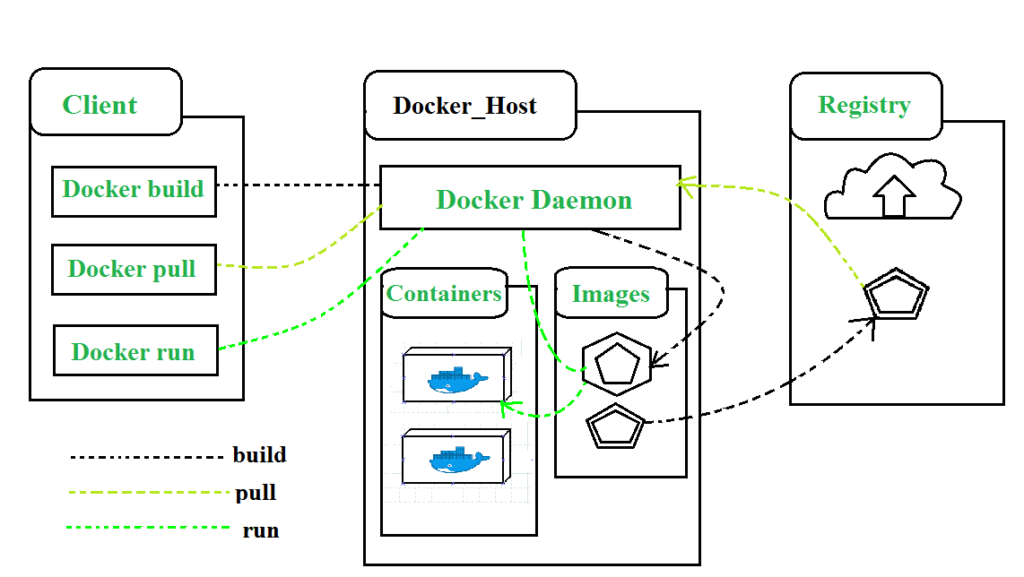

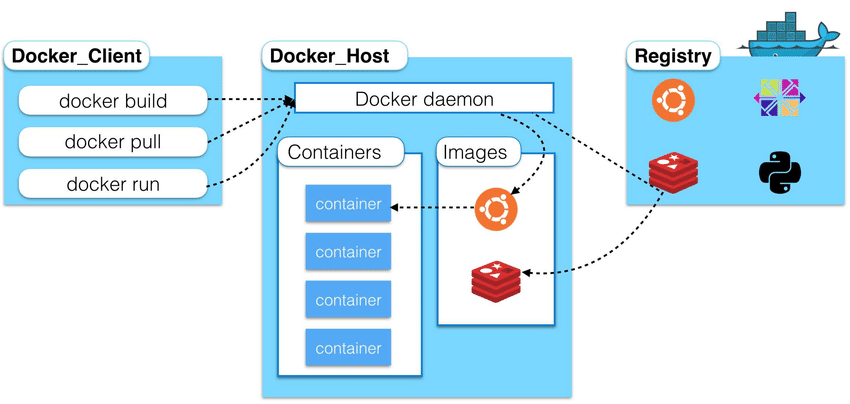

1. Client-Server Architecture:

Docker uses a client-server architecture. The Docker client communicates with the Docker daemon through a REST API, over a Unix socket or a network interface. The daemon is responsible for managing images, building and running containers, and managing networks and storage.
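The client-server split can be observed by talking to the daemon without the `docker` CLI at all. The sketch below assumes a typical Linux install where the daemon listens on `/var/run/docker.sock`, that `curl` is installed, and that the current user may access the socket; the helper name `docker_api_get` is made up for this example.

```shell
# Sketch: query the Docker Engine REST API directly, bypassing the docker CLI.
# Assumption: the daemon listens on the default Linux socket path below.
DOCKER_SOCK="${DOCKER_SOCK:-/var/run/docker.sock}"

# Issue a GET request for an API path (for example /version or /containers/json).
docker_api_get() {
  curl --silent --unix-socket "$DOCKER_SOCK" "http://localhost$1"
}

# Example calls (run on a host with a running daemon):
# docker_api_get /version          # engine and API version as JSON
# docker_api_get /containers/json  # running containers, similar to `docker ps`
```

The JSON returned by `/containers/json` carries the same information that `docker ps` formats as a table.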

2. Container Images:

Container images are the building blocks of Docker. They are read-only templates, built up from layers, that contain the application, its dependencies, and a minimal set of operating system files (but no kernel, which comes from the host). Images can be shared and reused easily, promoting consistency and portability.

3. Containerization Process:

- Building an Image: Developers write their code and create a Dockerfile, which specifies the instructions for building the image. The Docker daemon reads the Dockerfile and builds the image layer-by-layer.

- Running a Container: Once an image is built, it can be used to run containers. The Docker daemon creates a container instance from the image and starts the application.

- Sharing Images: Images can be shared publicly on Docker Hub or privately within a team or organization. This allows for easy collaboration and reuse of code.

4. Docker Architecture Components:

- Docker Client: The command-line tool used to interact with the Docker daemon.

- Docker Daemon: The server-side component that manages images, builds and runs containers, and manages networks and storage.

- Docker Registry: A service for storing and sharing images. Docker Hub is the public registry, but private registries can be used for internal sharing.

- Dockerfile: A text file that contains instructions for building a Docker image.

- Container: A running instance of an image. Each container has its own isolated file system, process space, and network interfaces.

5. Benefits of Docker:

- Portability: Docker containers run consistently across different environments, as long as the host provides a compatible Docker engine.

- Isolation: Each container is isolated from other containers, ensuring that applications don’t interfere with each other.

- Scalability: Containers are lightweight and can be started and stopped quickly, making them ideal for scaling applications.

- Reproducibility: Docker ensures that applications run the same way in every environment, promoting consistency and reproducibility.

- Automation: Docker can be easily integrated into CI/CD pipelines for automated builds and deployments.

By understanding how Docker works and its architecture, you can gain the knowledge and skills necessary to leverage its benefits and build modern, portable, and scalable applications.

How to Install and Configure Docker?

Installing and Configuring Docker

Following is a step-by-step guide on how to install and configure Docker:

1. Check System Compatibility:

Before installing Docker, ensure your system meets the minimum requirements:

- Operating System:

  - Linux: Latest stable version of Ubuntu, Debian, CentOS, Fedora, etc.

  - Windows: Windows 10 64-bit Pro, Enterprise, or Education

  - macOS: macOS 10.14 or later

- Hardware:

  - CPU: x86-64 architecture

  - Memory: 4GB RAM (minimum), 8GB+ recommended

  - Disk space: 2GB+ free space

2. Install Docker Engine:

Follow the official installation instructions for your operating system:

- Linux: Install Docker Engine by following the Linux instructions on the official Docker website.

- Windows: Install Docker Desktop for Windows from the official Docker website.

- macOS: Install Docker Desktop for Mac from the official Docker website.

3. Verify Docker Installation:

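After installation, run the following command to verify that the Docker CLI is installed:

docker --version

This should print the installed Docker version number. It does not confirm that the daemon is running; for that, run `docker info`, which reports an error if the daemon cannot be reached.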
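A small check script can separate the two failure modes: the CLI missing from the PATH, and the CLI present but unable to reach a daemon. This is a sketch; the function name `check_docker` and its messages are made up for illustration.

```shell
# Sketch: distinguish "CLI installed" from "daemon reachable".
check_docker() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found on PATH"
    return 1
  fi
  docker --version                      # prints the client version only
  if docker info >/dev/null 2>&1; then  # succeeds only with a reachable daemon
    echo "daemon is reachable"
  else
    echo "CLI is installed, but the daemon is not reachable"
    return 2
  fi
}

check_docker || true
```

If the second message appears on Linux, the daemon may simply not be started, or the current user may lack permission to access its socket.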

4. Configure Docker Daemon (Optional):

The Docker daemon comes with default settings, but you can customize them in its JSON configuration file (on Linux, usually located at /etc/docker/daemon.json). This allows you to configure:

- Network: Specify network settings like bridge IP address or port range.

- Storage: Configure storage options like volume drivers and data paths.

- Security: Enable security features like user namespaces or SELinux.
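As an illustration of these three areas, the sketch below writes an example `daemon.json` to a scratch file rather than to the live location, so it can be reviewed first. The keys are standard daemon options, but every value here is an assumption chosen for the example and should be adapted to your system.

```shell
# Sketch: write an example daemon.json to a scratch file for review.
# The values are illustrative assumptions, not recommended defaults.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
{
  "bip": "172.26.0.1/16",
  "data-root": "/var/lib/docker",
  "log-driver": "json-file",
  "log-opts": { "max-size": "10m", "max-file": "3" },
  "userns-remap": "default"
}
EOF
echo "Sample written to $sample"
# After review, copy it to /etc/docker/daemon.json and restart the daemon,
# for example with: sudo systemctl restart docker
```

Here `bip` sets the default bridge address (network), `data-root` and the log options control where and how much data is kept (storage), and `userns-remap` enables user namespace remapping (security).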

5. Learn Basic Commands:

Start exploring basic Docker commands to manage images and containers:

- `docker pull`: Downloads an image from a registry.

- `docker build`: Builds an image from a Dockerfile.

- `docker run`: Creates and runs a container from an image.

- `docker ps`: Lists running containers.

- `docker stop`: Stops a running container.

- `docker rm`: Removes a container.
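Strung together, these commands cover one full container lifecycle. The sketch below uses the public `nginx:alpine` image from Docker Hub; the container name `web-demo` and the host port 8080 are arbitrary choices for the example.

```shell
# Sketch: the basic commands as one container lifecycle.
# "web-demo" is a hypothetical container name.
demo_lifecycle() {
  docker pull nginx:alpine                               # download the image
  docker run -d --name web-demo -p 8080:80 nginx:alpine  # start it in the background
  docker ps                                              # web-demo should be listed
  docker stop web-demo                                   # stop the container
  docker rm web-demo                                     # remove the stopped container
}

# Run on a machine with a working Docker installation:
# demo_lifecycle
```

Between the `docker run` and `docker stop` steps, the server would be reachable at http://localhost:8080.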

6. Explore Docker Hub:

Docker Hub is a public registry where you can find pre-built images for various applications and tools. You can search, download, and use these images easily.

7. Start Building with Docker:

Once you feel comfortable with the basics, start building your own projects with Docker. Utilize Dockerfiles to define your development environment and create portable images for your applications.

Important Tips:

- Consider using Docker Compose for managing multi-container applications.

- Explore Docker volumes for persistent data storage.

- Experiment with different Docker networking options.

- Join the Docker community for support and learning opportunities.

By following these steps and exploring the provided resources, you can successfully install, configure, and start using Docker to build and deploy your applications effectively. Remember, practice and continuous learning are key to mastering this powerful technology.

Fundamental Tutorials of Docker: Getting started Step by Step

Following is a breakdown of step-by-step basic tutorials to get you started with Docker:

1. Introduction to Docker:

- What is Docker? Understand the basic concept of containers and how Docker helps with containerization.

- Benefits of Docker: Explore the advantages of using Docker like portability, scalability, and reproducibility.

- Docker Architecture: Learn about the key components of Docker, including the Docker client, daemon, images, and containers.

2. Installing Docker:

- System Requirements: Check if your system meets the minimum requirements for running Docker.

- Install Docker Engine: Follow the official installation guide based on your operating system (Linux, Windows, or macOS).

- Verify Installation: Run the `docker --version` command to ensure Docker is installed correctly.

3. Basic Docker Commands:

- Pulling Images: Use `docker pull` to download pre-built images from Docker Hub (e.g., `docker pull ubuntu`).

- Building Images: Create your own images from Dockerfiles using `docker build` (e.g., `docker build -t my-image .`).

- Running Containers: Start a container from an image with `docker run` (e.g., `docker run -it ubuntu bash`).

- Managing Containers: List running containers (`docker ps`), stop containers (`docker stop`), remove containers (`docker rm`), and more.

4. Building a Simple Application:

- Create a Dockerfile: Write a Dockerfile specifying the steps to build your application image (e.g., install dependencies, copy code, define entrypoint).

- Build and Run the Image: Use `docker build` and `docker run` to build the image from your Dockerfile and run the application within a container.

- Understanding Dockerfile: Learn about various Dockerfile instructions like `FROM`, `WORKDIR`, `RUN`, `COPY`, `CMD`, and `EXPOSE`.
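To make these instructions concrete, the sketch below creates a throwaway project containing one HTML page and a Dockerfile that serves it with Python's built-in web server. The file names, the image tag `hello-web:0.1`, the user `appuser`, and the ports are assumptions made for this example.

```shell
# Sketch: create a tiny project with a Dockerfile in a scratch directory.
# File names, the image tag, and the ports are assumptions for illustration.
project_dir="$(mktemp -d)"

echo '<h1>Hello from a container</h1>' > "$project_dir/index.html"

cat > "$project_dir/Dockerfile" <<'EOF'
# Base image to start from
FROM python:3.12-slim
# Working directory for the instructions that follow
WORKDIR /app
# Copy the application files into the image
COPY index.html .
# Run a build-time command: create an unprivileged user
RUN useradd --create-home appuser
USER appuser
# Document the port the application listens on
EXPOSE 8000
# Default command when a container starts
CMD ["python", "-m", "http.server", "8000"]
EOF

# Build the image and run it, mapping host port 8080 to container port 8000.
build_and_run() {
  docker build -t hello-web:0.1 "$project_dir"
  docker run --rm -d --name hello-web -p 8080:8000 hello-web:0.1
}

echo "Project created in $project_dir"
# build_and_run   # then open http://localhost:8080
```

Note that `EXPOSE` only documents the port; it is the `-p 8080:8000` flag on `docker run` that actually publishes it on the host.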

5. Docker Hub and Sharing Images:

- Explore Docker Hub: Learn about Docker Hub, a public registry for sharing and finding pre-built Docker images.

- Search for Images: Use the Docker Hub website or the `docker search` command to discover images for specific applications or tools.

- Sharing Your Own Images: Push your custom images to Docker Hub for collaboration and use on other systems.

6. Docker Networking:

- Understanding Docker Networks: Learn about Docker networks and how containers can communicate with each other.

- Creating and Connecting Networks: Create custom networks with `docker network create` and connect containers to them.

- Inter-container Communication: Containers on the same user-defined network can reach each other by container name. Use the `docker exec` command to run commands inside a running container, for example to test that connectivity.
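A minimal way to try this out is to put two containers on one user-defined network and have one reach the other by name. In the sketch below, `demo-net`, `web`, and `client` are hypothetical names, and the images are the public `nginx:alpine` and `alpine` images.

```shell
# Sketch: two containers on a user-defined network reaching each other by name.
# "demo-net", "web", and "client" are hypothetical names.
network_demo() {
  docker network create demo-net
  docker run -d --name web --network demo-net nginx:alpine
  docker run -d --name client --network demo-net alpine sleep 300
  # On a user-defined network, Docker's built-in DNS resolves the name "web".
  docker exec client ping -c 1 web
}

# Remove the containers first, then the network they were attached to.
network_cleanup() {
  docker rm -f web client
  docker network rm demo-net
}

# network_demo
# network_cleanup
```

Name resolution by container name is a property of user-defined networks; containers on the default bridge network do not get it automatically.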

7. Volumes and Persistent Data:

- Understanding Docker Volumes: Learn about Docker volumes for persisting data beyond container lifecycles.

- Creating and Mounting Volumes: Create volumes with `docker volume create` and mount them to containers for persisting data.

- Sharing Data between Containers: Use volumes to share data between different containers running the same application.
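The persistence and sharing described here can be demonstrated with two short-lived containers that mount the same named volume. The volume name `demo-data` and the file path are assumptions for this sketch.

```shell
# Sketch: data written by one container survives it and is visible to another.
# "demo-data" is a hypothetical volume name.
volume_demo() {
  docker volume create demo-data
  # The first container writes a file into the volume, then exits and is removed.
  docker run --rm -v demo-data:/data alpine sh -c 'echo "saved by the first container" > /data/note.txt'
  # A second, separate container mounts the same volume and reads the file.
  docker run --rm -v demo-data:/data alpine cat /data/note.txt
}

# Deleting the volume is what finally discards the data.
volume_cleanup() {
  docker volume rm demo-data
}

# volume_demo
# volume_cleanup
```

Both containers are started with `--rm`, so neither exists by the time the demo finishes; only the volume carries the file between them.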

8. Docker Compose and Orchestration:

- Introduction to Docker Compose: Learn about Docker Compose, a tool for defining and running multi-container applications.

- Creating a Docker Compose File: Define your application’s composition with services, volumes, networks, and other configurations.

- Running a Multi-container Application: Use `docker-compose up` (or `docker compose up` with Compose V2, which ships as a Docker CLI plugin) to start and manage all containers defined in your Compose file.
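A Compose file for a small two-service application might look like the one generated below. This is a sketch under assumptions: the service names `web` and `cache`, the volume `cache-data`, the project name `compose-demo`, and the choice of the public `nginx:alpine` and `redis:7-alpine` images are all made up for illustration, and the commands use the Compose V2 syntax.

```shell
# Sketch: generate a small Compose file in a scratch directory.
# Service, volume, and project names are assumptions for illustration.
compose_dir="$(mktemp -d)"

cat > "$compose_dir/compose.yaml" <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"
    depends_on:
      - cache
  cache:
    image: redis:7-alpine
    volumes:
      - cache-data:/data
volumes:
  cache-data:
EOF

# Start every service in the background (Compose V2 syntax).
compose_up() {
  docker compose -p compose-demo -f "$compose_dir/compose.yaml" up -d
}

# Stop and remove the containers and the project network.
compose_down() {
  docker compose -p compose-demo -f "$compose_dir/compose.yaml" down
}

echo "Compose file written to $compose_dir/compose.yaml"
# compose_up
# compose_down
```

Compose places both services on a shared project network, so `web` could reach the other service under the hostname `cache`.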

9. Exploring Further:

- Learn about Docker Swarm and Kubernetes for managing large-scale container deployments.

- Utilize Docker for CI/CD pipelines to automate builds, deployments, and testing.

- Discover best practices for securing your Docker environment.

- Join the Docker community for support, tutorials, and learning opportunities.

These step-by-step tutorials provide a solid foundation for mastering Docker basics. By practicing, experimenting, and exploring further resources, you can become proficient in using Docker to build, deploy, and manage modern applications.
