What is Kubernetes?

Kubernetes is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. It was originally developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). Kubernetes simplifies deploying and operating containerized applications by automating many stages of the application lifecycle.

What are the top use cases of Kubernetes?

Top Use Cases of Kubernetes:

- Container Orchestration:

- Kubernetes excels at orchestrating containers, providing a unified platform for deploying, managing, and scaling containerized applications. It automates tasks such as container deployment, scaling, and load balancing, making it easier to manage complex microservices architectures.

- Microservices Architecture:

- Kubernetes is well-suited for managing microservices-based applications. It enables the deployment of microservices as containers, manages their lifecycle, and facilitates communication between different services, promoting modularity and scalability.

- Application Scaling:

- Kubernetes allows applications to scale horizontally by adding or removing instances of containers based on demand. This autoscaling capability ensures that applications can handle varying levels of traffic efficiently.

- Service Discovery and Load Balancing:

- Kubernetes provides built-in service discovery and load balancing. It automatically assigns a stable virtual IP address and DNS name to each Service, so other components can discover and communicate with it, and it spreads incoming traffic across the Pods that back the Service.

- Rolling Deployments and Rollbacks:

- Kubernetes supports rolling deployments, allowing applications to be updated without downtime. It gradually replaces old instances with new ones, ensuring a smooth transition. In case of issues, rollbacks can be easily performed to revert to a previous version.

- Resource Utilization and Efficiency:

- Kubernetes improves resource utilization by placing containers on nodes according to the CPU and memory they request. This helps applications get the resources they need while reducing over-provisioning, which can lower costs.

- Multi-Cloud and Hybrid Cloud Deployments:

- Kubernetes provides a consistent platform for deploying applications across various cloud providers or on-premises data centers. This flexibility makes it suitable for organizations adopting multi-cloud or hybrid cloud strategies.

- DevOps Automation:

- Kubernetes supports DevOps practices by automating many aspects of application deployment and management. It integrates with continuous integration and continuous deployment (CI/CD) tools, streamlining the software development lifecycle.

- Stateful Applications:

- While initially designed for stateless applications, Kubernetes has evolved to support stateful applications as well. StatefulSets and Persistent Volumes enable the deployment of databases, key-value stores, and other stateful workloads.

- Batch Processing and Jobs:

- Kubernetes can run batch processing jobs (Jobs) and scheduled tasks (CronJobs). It manages job execution, making sure jobs run to completion, and supports features such as parallelism and restart policies.

- Logging and Monitoring:

- Kubernetes integrates with logging and monitoring solutions to provide insights into the performance and health of applications. Popular tools like Prometheus and Grafana can be used to monitor the Kubernetes cluster and applications running on it.

- Container Network Policies:

- Kubernetes lets you define NetworkPolicies that control traffic between pods. These rules improve security by restricting which components of an application can communicate; they are enforced only when the cluster's network plugin supports them (Calico, for example).

- Edge Computing:

- Kubernetes can be extended to support edge computing scenarios where applications run on distributed nodes in remote locations. This is useful for deploying and managing applications in edge environments with limited resources.

- Machine Learning and AI Workloads:

- Kubernetes is used for deploying and managing machine learning (ML) and artificial intelligence (AI) workloads. It provides a scalable and resource-efficient platform for running training jobs, inference engines, and distributed ML applications.

- Internet of Things (IoT):

- In IoT deployments, Kubernetes can be used to manage and orchestrate containerized applications on edge devices. This allows for efficient deployment and management of applications in distributed IoT environments.

Kubernetes has become a fundamental technology in the containerization and cloud-native ecosystem, and its versatility makes it applicable to a wide range of use cases, from small-scale applications to large, complex distributed systems.
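To make rolling deployments more concrete, here is a toy Python simulation of how a Deployment's RollingUpdate strategy bounds an update with `maxSurge` (extra Pods allowed above the desired count) and `maxUnavailable` (Pods allowed to be unavailable). It is a simplified model, not the Deployment controller's actual logic: it treats both settings as plain integers (Kubernetes also accepts percentages) and assumes new Pods become Ready instantly.

```python
def rolling_update(replicas: int, max_surge: int, max_unavailable: int) -> list[tuple[int, int]]:
    """Return the (old, new) Pod counts after each step of a toy rolling update.

    Simplifying assumption: new Pods are Ready as soon as they are created.
    """
    if max_surge == 0 and max_unavailable == 0:
        # With no surge and no unavailability budget, this loop could never progress.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = []
    while old > 0 or new < replicas:
        # Scale up: never exceed replicas + max_surge Pods in total.
        create = min(replicas + max_surge - (old + new), replicas - new)
        new += max(create, 0)
        # Scale down: keep at least replicas - max_unavailable Pods available.
        remove = min(old, old + new - (replicas - max_unavailable))
        old -= max(remove, 0)
        steps.append((old, new))
    return steps


print(rolling_update(4, max_surge=1, max_unavailable=1))  # [(2, 1), (0, 3), (0, 4)]
```

With 4 replicas, `maxSurge=1` and `maxUnavailable=1`, the sketch never runs more than 5 Pods or keeps fewer than 3 available, which is the trade-off those two settings control.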

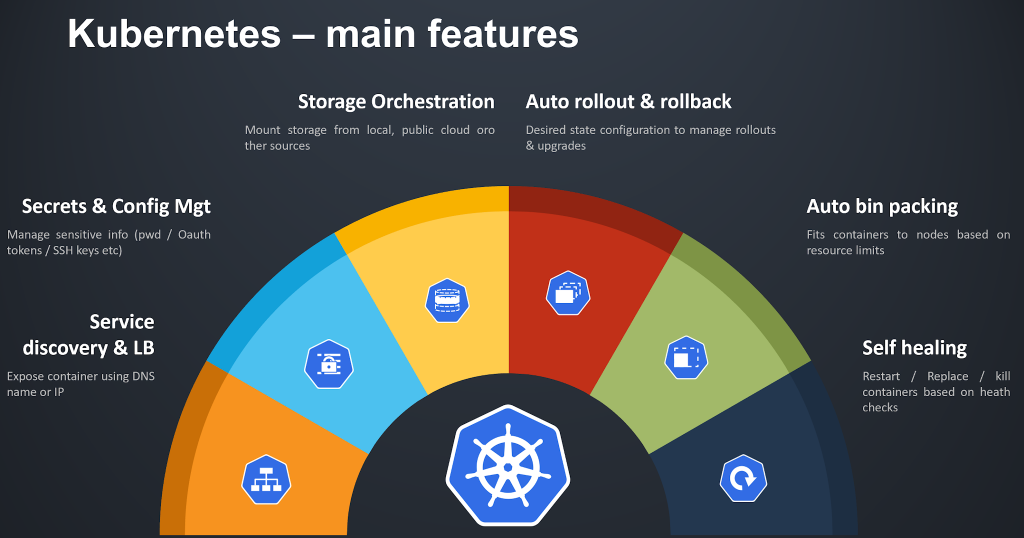

What are the features of Kubernetes?

Features of Kubernetes:

- Container Orchestration:

- Kubernetes automates the deployment, scaling, and management of containerized applications. It abstracts the underlying infrastructure, providing a unified platform for orchestrating containers.

- Automated Load Balancing:

- Kubernetes includes built-in load balancing that distributes traffic across the pods behind a Service. This spreads load across replicas and helps keep applications available when individual pods fail.

- Self-Healing:

- Kubernetes monitors the health of containers and can automatically restart or replace failed containers. This self-healing capability helps maintain the desired state of the application.

- Declarative Configuration:

- Configuration in Kubernetes is specified declaratively through YAML or JSON files. Users define the desired state of the system, and Kubernetes takes care of bringing the actual state in line with the declared state.

- Service Discovery and DNS:

- Kubernetes automatically assigns DNS names and IP addresses to services, facilitating service discovery. This enables components to communicate with each other using well-defined names.

- Rolling Deployments and Rollbacks:

- Kubernetes supports rolling deployments, allowing updates to be applied gradually without downtime. If issues arise, rollbacks can be initiated to revert to the previous stable version.

- Horizontal Scaling:

- Kubernetes enables automatic scaling of applications by adjusting the number of replicas based on resource utilization or custom metrics. This ensures that applications can manage varying levels of traffic.

- Storage Orchestration:

- Kubernetes manages storage volumes and enables dynamic provisioning of storage resources for containers. It supports various storage solutions, including local storage, network-attached storage (NAS), and cloud storage.

- Secrets and Configuration Management:

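- Kubernetes manages sensitive information, such as passwords and API keys, through Secrets. Secret values are only base64-encoded by default, so access control (RBAC) and encryption at rest should be configured to protect them. Kubernetes also supports ConfigMaps for managing configuration data separately from application code.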

- Multi-Cloud and Hybrid Cloud Support:

- Kubernetes is designed to be cloud-agnostic, allowing applications to run seamlessly across different cloud providers or on-premises data centers. This flexibility supports multi-cloud and hybrid cloud deployments.

- Role-Based Access Control (RBAC):

- Kubernetes enforces access control policies through RBAC. This allows administrators to define roles and permissions for users and services, ensuring secure access to cluster resources.

- Container Networking:

- Kubernetes manages networking between containers and pods. It assigns unique IP addresses to each pod and ensures communication between pods within the same cluster, even across different nodes.

- Batch Execution and Jobs:

- Kubernetes supports batch processing and job execution. Users can define jobs that run to completion, and Kubernetes ensures their execution, parallelism, and restart policies.

- Monitoring and Logging Integration:

- Kubernetes integrates with monitoring and logging solutions to provide visibility into the performance and health of applications. Popular tools like Prometheus and Grafana are commonly used for monitoring Kubernetes clusters.

- Custom Resource Definitions (CRDs):

- Kubernetes allows users to define custom resource types and controllers through Custom Resource Definitions (CRDs). This extensibility enables the integration of custom applications and components.
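Secret values in a manifest's `data` field are stored as base64, which is an encoding rather than encryption. The Python sketch below builds a minimal Secret manifest as a dict (Kubernetes accepts JSON manifests as well as YAML) and then decodes the value again to show how little protection the encoding itself offers. The Secret name, key, and value are hypothetical.

```python
import base64
import json


def secret_manifest(name: str, values: dict[str, str]) -> dict:
    """Build a minimal Opaque Secret manifest with base64-encoded values."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        # Values under "data" must be base64-encoded strings.
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


# Hypothetical Secret name, key, and value.
secret = secret_manifest("db-credentials", {"password": "s3cr3t"})
print(json.dumps(secret, indent=2))

# Anyone who can read the manifest can reverse the encoding.
print(base64.b64decode(secret["data"]["password"]).decode("utf-8"))  # s3cr3t
```

Kubernetes also accepts plain strings in a `stringData` field and encodes them for you; either way, real protection comes from restricting access to Secrets and enabling encryption at rest.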

What is the workflow of Kubernetes?

Workflow of Kubernetes:

- Define Application Configuration:

- Users define the desired state of their applications and infrastructure using configuration files (YAML or JSON). This includes specifications for containers, pods, services, and other resources.

- Deploy Application:

- Use the `kubectl` command-line tool or declarative configuration files to deploy the application to the Kubernetes cluster. Kubernetes takes care of scheduling containers, creating pods, and managing resources.

- Kubernetes API Server:

- The Kubernetes API server receives requests and configuration from users, clients, or controllers. It validates and processes these requests to ensure they align with the desired state.

- Controller Managers:

- Controller managers are components that continually work to bring the cluster’s actual state in line with the desired state. They manage controllers responsible for tasks such as replication, endpoints, and nodes.

- Etcd:

- Etcd is a distributed key-value store that stores the configuration data and the state of the entire Kubernetes cluster. It serves as the source of truth for the cluster’s current state.

- Scheduler:

- The Kubernetes scheduler assigns pods to nodes based on resource requirements, affinity rules, and other constraints. It first filters out nodes that cannot run a pod, then scores the remaining nodes to choose the best fit.

- Kubelet:

- Kubelet is an agent running on each node in the cluster. It communicates with the Kubernetes API server and ensures that containers within pods are running and healthy on the node.

- Container Runtime:

- Kubernetes works with container runtimes that implement the Container Runtime Interface (CRI), such as containerd and CRI-O; built-in support for Docker Engine (dockershim) was removed in Kubernetes 1.24. The container runtime is responsible for pulling container images, creating containers, and managing their lifecycle.

- Service Discovery and Load Balancing:

- Kubernetes assigns stable IP addresses and DNS names to services. This facilitates service discovery, and load balancing ensures that traffic is evenly distributed among instances of a service.

- Monitoring and Logging:

- Use monitoring and logging tools to observe the performance and health of the Kubernetes cluster and applications. This includes tracking resource utilization, diagnosing issues, and ensuring availability.

- Scale and Update:

- As traffic or demand changes, scale the application horizontally by adjusting the number of replicas. Kubernetes can also handle rolling updates to deploy new versions of applications without downtime.

- Security Configuration:

- Implement security measures such as RBAC, network policies, and Secrets to secure the Kubernetes cluster and applications. Regularly update and patch the cluster to address security vulnerabilities.

- Backup and Recovery:

- Set up backup and recovery procedures to ensure the resilience of data and configurations. This involves periodic snapshots, backups of etcd, and a clear recovery strategy in case of failures.

- Continuous Integration/Continuous Deployment (CI/CD):

- Integrate Kubernetes with CI/CD pipelines to automate the building, testing, and deployment of applications. CI/CD tools can trigger updates and rollouts based on changes to the application code.

- Custom Resource Definitions (CRDs):

- For advanced use cases, define custom resources and controllers using CRDs. This allows users to extend the capabilities of Kubernetes to meet specific requirements.

Understanding the workflow of Kubernetes involves defining the desired state of applications, deploying them to the cluster, and leveraging Kubernetes components to ensure that the cluster operates efficiently, securely, and in accordance with the specified configurations.
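The controller managers in this workflow all follow the same pattern: compare the desired state with the observed state and act on the difference. The Python below is a toy model of a single reconciliation pass, not Kubernetes code; the app names and the dict-based "cluster" are hypothetical, and every action is assumed to succeed immediately.

```python
def reconcile(desired: dict[str, int], observed: dict[str, int]) -> list[str]:
    """Run one pass of a toy control loop and return the actions it takes.

    `desired` and `observed` map a hypothetical app name to a Pod count.
    `observed` is updated in place, as if every action succeeded at once.
    """
    actions = []
    for app, want in desired.items():
        have = observed.get(app, 0)
        if have < want:
            actions.append(f"create {want - have} pod(s) for {app}")
        elif have > want:
            actions.append(f"delete {have - want} pod(s) for {app}")
        observed[app] = want
    # Anything still running that is no longer declared gets removed.
    for app in list(observed):
        if app not in desired:
            actions.append(f"delete {observed.pop(app)} pod(s) for {app}")
    return actions


observed = {"web": 3, "worker": 1}
desired = {"web": 3, "worker": 2}
print(reconcile(desired, observed))  # ['create 1 pod(s) for worker']
observed["web"] = 2                  # simulate a crashed Pod
print(reconcile(desired, observed))  # ['create 1 pod(s) for web']
```

Because the loop only reacts to the gap between the two states, the same code handles a new declaration (scale-up) and an unexpected failure (self-healing).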

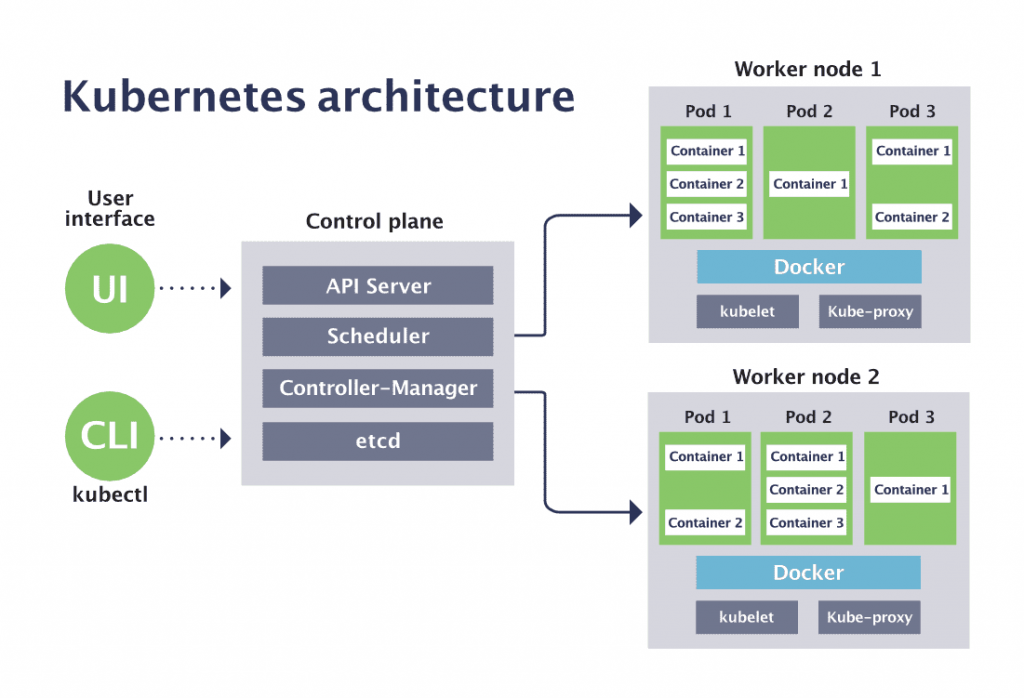

How Does Kubernetes Work, and What Is Its Architecture?

Kubernetes is a container orchestration platform that manages and automates the deployment, scaling, and networking of containerized applications. It’s like a conductor for your containerized orchestra, ensuring everything runs smoothly and efficiently.

How it Works:

- Containerization: Applications are packaged in containers, isolated units with their own runtime environment and dependencies.

- Deployment: You define your application’s desired state in YAML files, specifying pods (groups of containers), deployments (replicated sets of pods), and services (abstractions for accessing pods).

- Cluster Management: Kubernetes runs on a cluster of nodes (machines) that host your containerized applications. The control plane manages the cluster, while worker nodes run the containers.

- Scheduling and Scaling: Kubernetes schedules pods across available nodes based on resource requirements and constraints. With a HorizontalPodAutoscaler configured, it can also scale the number of pods up or down based on observed metrics such as CPU utilization.

- Self-Healing: Kubernetes automatically restarts failed containers, and controllers such as Deployments replace pods lost to a failed node by creating new ones on healthy nodes, helping keep your applications available.

- Load Balancing: Services act as abstractions for accessing pods, providing load balancing and service discovery for your applications.
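Autoscaling is driven by a simple ratio. The HorizontalPodAutoscaler documentation gives the core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below applies that rule with a tolerance band (0.1 by default in Kubernetes) and example min/max bounds; the real controller also handles unready Pods, missing metrics, and stabilization windows, which this sketch omits.

```python
import math


def desired_replicas(current_replicas: int, current_value: float, target_value: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Toy version of the HorizontalPodAutoscaler's core scaling rule."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        # Within tolerance of the target: leave the replica count alone.
        return current_replicas
    # desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)
    desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds (the defaults here are example values).
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_value=90, target_value=60))  # 6
print(desired_replicas(4, current_value=30, target_value=60))  # 2
```

For example, 4 pods averaging 90% CPU against a 60% target scale to 6, while 4 pods at 63% stay at 4 because the ratio is within the tolerance.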

Architecture:

Kubernetes has a layered architecture:

- Control Plane:

- API Server: Exposes the Kubernetes API for managing the cluster.

- Scheduler: Decides where to run pods based on resource availability and constraints.

- Controller Manager: Manages various cluster components like deployments, services, and nodes.

- etcd: Distributed key-value store for storing cluster state.

- Worker Nodes:

- kubelet: Runs on each node, managing pods and communicating with the control plane.

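- Container Runtime: Runs containers from images and manages their lifecycle (e.g., containerd, CRI-O).

- kube-proxy: Typically runs on each node and maintains the network rules that implement Services.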
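The scheduler's placement decision can be pictured as two phases: filter out nodes that cannot run the pod, then score the remaining nodes and pick the highest-scoring one. The sketch below models only CPU and memory requests and uses a simple "most free resources left" score, loosely in the spirit of a least-allocated strategy; the real kube-scheduler runs many more filter and score plugins (affinity, taints, topology spread, and so on). Node names and sizes are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int    # millicores allocatable on the node (assumed > 0)
    cpu_requested: int   # millicores already requested by pods on the node
    mem_capacity: int    # MiB allocatable on the node (assumed > 0)
    mem_requested: int   # MiB already requested by pods on the node


def schedule(pod_cpu: int, pod_mem: int, nodes: list[Node]) -> Optional[str]:
    """Pick a node for a pod using a simplified filter-then-score approach."""
    # Filter: keep only nodes with enough unrequested CPU and memory.
    feasible = [
        n for n in nodes
        if n.cpu_requested + pod_cpu <= n.cpu_capacity
        and n.mem_requested + pod_mem <= n.mem_capacity
    ]
    if not feasible:
        return None  # no node fits; the pod would stay Pending

    # Score: prefer the node that keeps the largest share of resources free.
    def score(n: Node) -> float:
        cpu_free = (n.cpu_capacity - n.cpu_requested - pod_cpu) / n.cpu_capacity
        mem_free = (n.mem_capacity - n.mem_requested - pod_mem) / n.mem_capacity
        return (cpu_free + mem_free) / 2

    return max(feasible, key=score).name


nodes = [
    Node("node-a", 4000, 3500, 8192, 2048),
    Node("node-b", 4000, 1000, 8192, 6144),
    Node("node-c", 2000, 0, 4096, 0),
]
print(schedule(500, 1024, nodes))   # node-c
print(schedule(3000, 512, nodes))   # node-b
print(schedule(5000, 512, nodes))   # None
```

Spreading pods toward the least-loaded node is only one possible policy; a bin-packing score would instead favor the fullest node that still fits.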

Key Benefits:

- Scalability: Easily scale your applications up or down based on demand.

- High Availability: Kubernetes self-heals, helping keep your applications available.

- Portability: Run your applications on any cloud or on-premises infrastructure.

- Flexibility: Define your application’s desired state and let Kubernetes handle the rest.

- Increased Efficiency: Optimize resource utilization and reduce operational overhead.

Use Cases:

- Microservices Architecture: Deploy and manage microservices applications efficiently.

- Continuous Integration and Delivery (CI/CD): Automate deployments and rollbacks for faster development cycles.

- Cloud-Native Applications: Build and run scalable applications on any cloud platform.

How to Install and Configure Kubernetes?

The following guide covers different approaches to installing and configuring Kubernetes:

I. Local Development (Single-Node Cluster):

- Minikube:

- Easy setup for local testing and development.

- Install Minikube from their official website.

- Start a cluster: `minikube start`

- Docker Desktop:

- Built-in Kubernetes support if using Docker Desktop.

- Enable Kubernetes in Docker Desktop settings.

II. Cloud-Managed Services:

- Google Kubernetes Engine (GKE):

- Fully managed Kubernetes on Google Cloud Platform.

- Create a cluster through the GCP console or CLI.

- Amazon Elastic Kubernetes Service (EKS):

- Managed Kubernetes on Amazon Web Services.

- Create a cluster through the AWS console or CLI.

- Azure Kubernetes Service (AKS):

- Managed Kubernetes on Microsoft Azure.

- Create a cluster through the Azure portal or CLI.

III. Manual Installation (On-Premises or Custom Cloud):

- Prerequisites: Multiple Linux servers (virtual or physical).

- Install kubeadm, a tool for cluster setup, along with kubelet, kubectl, and a container runtime on every node.

- Initialize the control-plane (master) node: `kubeadm init`

- Join worker nodes: on each worker, run the `kubeadm join` command printed by `kubeadm init`.

IV. Development Tools:

- kubectl: Command-line tool for interacting with clusters.

- Helm: Package manager for Kubernetes applications.

Configuration:

- Networking: Configure pod networking (e.g., Flannel, Calico).

- Security: Implement role-based access control (RBAC) and network policies.

- Resource Management: Set resource limits and requests for pods.

- Storage: Configure persistent volumes for data storage.
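Resource requests and limits are written in Kubernetes quantity notation, for example `cpu: 500m` (half a CPU core) or `memory: 128Mi` (128 mebibytes). The Python sketch below converts the common suffixes into plain numbers to show what those strings mean; it covers only the usual binary (Ki, Mi, Gi, ...) and decimal (m, k, M, G, ...) suffixes plus exponent notation, and skips the validation the real quantity parser performs.

```python
from decimal import Decimal

# Multipliers for common Kubernetes quantity suffixes (not exhaustive).
_SUFFIXES = {
    "Ki": Decimal(2**10), "Mi": Decimal(2**20), "Gi": Decimal(2**30),
    "Ti": Decimal(2**40), "Pi": Decimal(2**50), "Ei": Decimal(2**60),
    "m": Decimal("0.001"),
    "k": Decimal(10**3), "M": Decimal(10**6), "G": Decimal(10**9),
    "T": Decimal(10**12), "P": Decimal(10**15), "E": Decimal(10**18),
}


def parse_quantity(quantity: str) -> Decimal:
    """Convert a quantity string such as '500m' or '128Mi' to a number."""
    # Try two-letter suffixes first so "Mi" is not mistaken for a plain number.
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if quantity.endswith(suffix):
            return Decimal(quantity[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(quantity)  # plain number or exponent form like "1e3"


print(parse_quantity("500m"))   # 0.500 (CPU cores)
print(parse_quantity("128Mi"))  # 134217728 (bytes)
print(parse_quantity("1G"))     # 1000000000 (bytes)
```

`Decimal` is used instead of `float` so values like `0.1` CPU stay exact when requests are summed.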

Fundamental Tutorials of Kubernetes: Getting started Step by Step

Building your understanding of Kubernetes is exciting! Here’s a step-by-step fundamental tutorial to get you started:

1. Choose your environment:

- Minikube: Best for beginners, quick local test environment.

- Docker Desktop: Easy if you already use Docker, built-in Kubernetes support.

- Cloud-managed service: GKE, EKS, AKS offer a seamless experience with infrastructure managed for you.

2. Set up your cluster:

- Minikube:

- Download and install Minikube for your OS from their official website.

- Start a cluster with `minikube start`.

- Docker Desktop:

- Enable Kubernetes in your Docker Desktop settings.

- Cloud-managed service:

- Create a cluster through the respective provider’s console or CLI.

3. Meet kubectl:

- `kubectl` is your command-line companion for interacting with your cluster.

- Check that it’s working: `kubectl get nodes` (should show your cluster node).

4. Deploy your first Pod:

- A Pod is the basic unit of deployment in Kubernetes, holding one or more containers.

- Create a simple Pod manifest file (e.g., `pod.yaml`) specifying an image (e.g., `nginx`).

- Use `kubectl apply -f pod.yaml` to deploy your Pod.

- Verify with `kubectl get pods` (should show your Pod running).
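Kubernetes accepts manifests in JSON as well as YAML, so a minimal Pod manifest can also be generated from code. The Python sketch below builds one as a dict; the name `nginx-demo` and the `app` label are hypothetical choices, and port 80 is where the official nginx image listens.

```python
import json


def pod_manifest(name: str, image: str, container_port: int) -> dict:
    """Build a minimal Pod manifest as a Python dict."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        # The "app" label lets a Service select this Pod later.
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": container_port}],
                }
            ]
        },
    }


if __name__ == "__main__":
    # "nginx-demo" is a hypothetical name.
    print(json.dumps(pod_manifest("nginx-demo", "nginx", 80), indent=2))
```

Save the output with something like `python make_pod.py > pod.json` (the script name is hypothetical) and deploy it with `kubectl apply -f pod.json`.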

5. Access your Pod:

- Find the Pod’s internal IP address with `kubectl get pods -o wide`.

- Use `kubectl port-forward <pod-name> <local-port>:<target-port>` to map the Pod’s port to your local machine.

- Open the local port in your browser (e.g., http://localhost:8080) to access your application exposed by the Pod.

6. Explore Services:

- Services provide a stable endpoint for accessing multiple Pods (load balancing).

- Create a Service manifest (`service.yaml`) whose selector matches your Pod’s labels.

- Deploy the Service with `kubectl apply -f service.yaml`.

- Use the Service’s cluster IP address (found with `kubectl get services`) to access your application from other Pods within the cluster.
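A Service does not reference Pods by name; it selects every Pod whose labels contain all of the key/value pairs in its selector. The Python sketch below builds a minimal Service manifest (type ClusterIP, the default) and includes a small `selects` helper that mimics this equality-based matching. The names and labels are hypothetical and assume a Pod labeled `app=nginx-demo`.

```python
import json


def service_manifest(name: str, selector: dict[str, str], port: int, target_port: int) -> dict:
    """Build a minimal ClusterIP Service manifest as a Python dict."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": selector,  # which Pods receive traffic
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def selects(selector: dict[str, str], pod_labels: dict[str, str]) -> bool:
    """Return True if a Service with this selector would pick a Pod with these labels."""
    if not selector:
        # A Service without a selector does not pick up Pods automatically.
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    svc = service_manifest("nginx-demo", {"app": "nginx-demo"}, port=80, target_port=80)
    print(json.dumps(svc, indent=2))
    print(selects(svc["spec"]["selector"], {"app": "nginx-demo", "tier": "web"}))  # True
    print(selects(svc["spec"]["selector"], {"app": "other"}))                      # False
```

Extra labels on a Pod do not prevent a match, which is why one Pod can be selected by several Services.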

7. Scale your application:

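- Scaling is done through a Deployment, which manages a set of identical Pod replicas. If you created a bare Pod in step 4, define a Deployment manifest (e.g., `deployment.yaml`) for the same image and increase its `replicas` value.

- Re-apply the Deployment with `kubectl apply -f deployment.yaml`.

- Kubernetes automatically creates the additional Pods to match the new replica count!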
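Here is a minimal Deployment manifest generated from Python, again relying on Kubernetes accepting JSON manifests. The name `nginx-demo` is hypothetical; the important parts are the `replicas` field and a `selector` that matches the labels in the Pod template.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal Deployment manifest as a Python dict."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Scaling means changing this number and re-applying the manifest.
            "replicas": replicas,
            # The selector must match the labels in the Pod template below.
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical name; raise replicas (e.g., from 1 to 3) to scale out.
    print(json.dumps(deployment_manifest("nginx-demo", "nginx", 3), indent=2))
```

Apply the output with `kubectl apply -f deployment.json`. For a quick one-off change, `kubectl scale deployment nginx-demo --replicas=3` also works, but a later `kubectl apply` of the manifest sets the count back to the manifest's value.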

This is just a starting point, and there’s so much more to discover in the fascinating world of Kubernetes. Keep exploring, experimenting, and enjoy the journey!
