Container Runtime Interface (CRI)

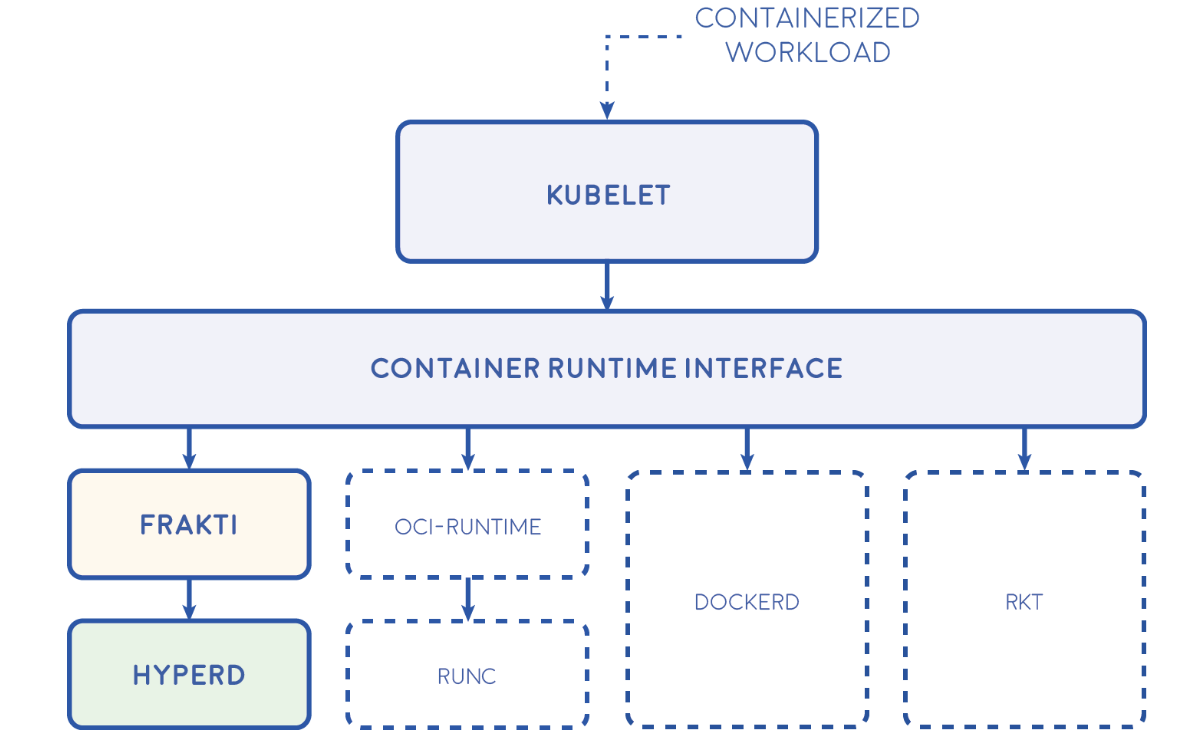

The Container Runtime Interface (CRI) is a plugin interface that lets the kubelet, an agent that runs on every node in a Kubernetes cluster, use more than one type of container runtime. At the lowest layer of a Kubernetes node is the software that, among other things, starts and stops containers. We call this the “container runtime”. The most widely known container runtime is Docker, but it is not alone in this space.

Kubelet communicates with the container runtime (or a CRI shim for the runtime) over Unix sockets using the gRPC framework, where kubelet acts as a client and the CRI shim as the server.
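The client/server split over a Unix socket can be illustrated with a toy sketch. This is not real CRI traffic: it swaps gRPC and protocol buffers for plain JSON so that it runs with the standard library only. The `Version` method name is borrowed from the CRI API, while the socket file name and the reply values are made up for illustration.

```python
import json
import os
import socket
import tempfile
import threading


def serve_once(server_sock: socket.socket) -> None:
    """Toy 'CRI shim' (server side): accept one connection, answer one request."""
    conn, _ = server_sock.accept()
    with conn:
        request = json.loads(conn.recv(4096).decode())
        # Real CRI uses gRPC methods and protobuf messages, not JSON.
        if request.get("method") == "Version":
            reply = {"runtime_name": "toy-runtime", "runtime_version": "0.0.1"}
        else:
            reply = {"error": "unknown method"}
        conn.sendall(json.dumps(reply).encode())


def call_runtime(socket_path: str, method: str) -> dict:
    """Toy 'kubelet' (client side): connect to the socket and send one request."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as client:
        client.connect(socket_path)
        client.sendall(json.dumps({"method": method}).encode())
        return json.loads(client.recv(4096).decode())


def demo() -> dict:
    """Start the toy server on a temporary Unix socket and query it once."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "toy-cri.sock")  # hypothetical socket name
        with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as server:
            server.bind(path)
            server.listen(1)
            worker = threading.Thread(target=serve_once, args=(server,))
            worker.start()
            reply = call_runtime(path, "Version")
            worker.join()
        return reply
```

Calling `demo()` returns the reply dictionary produced by the server side, which mirrors how the kubelet asks a runtime to identify itself before using it.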

CRI enables the kubelet to use a wide variety of container runtimes without the need to recompile. It consists of a protocol buffers and gRPC API, plus libraries, with additional specifications and tools under active development. CRI was first released as alpha in Kubernetes 1.5.
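To make the shape of the API more concrete, here is a rough Python sketch of the two services that CRI defines, with a small subset of their calls. The method names are snake_case versions of real CRI RPCs (`RunPodSandbox`, `CreateContainer`, `StartContainer`, `StopContainer`, `PullImage`, `ListImages`), but the simplified signatures and the in-memory `FakeRuntime` are assumptions made for illustration, not the actual gRPC definitions.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class RuntimeService(ABC):
    """Pod sandbox and container lifecycle calls (small subset)."""

    @abstractmethod
    def run_pod_sandbox(self, pod_name: str) -> str: ...

    @abstractmethod
    def create_container(self, sandbox_id: str, image: str) -> str: ...

    @abstractmethod
    def start_container(self, container_id: str) -> None: ...

    @abstractmethod
    def stop_container(self, container_id: str) -> None: ...


class ImageService(ABC):
    """Image management calls (small subset)."""

    @abstractmethod
    def pull_image(self, image: str) -> str: ...

    @abstractmethod
    def list_images(self) -> List[str]: ...


class FakeRuntime(RuntimeService, ImageService):
    """Hypothetical in-memory runtime; it only tracks state, nothing is executed."""

    def __init__(self) -> None:
        self.images: List[str] = []
        self.sandboxes: List[str] = []
        self.containers: Dict[str, str] = {}  # container id -> state

    def pull_image(self, image: str) -> str:
        if image not in self.images:
            self.images.append(image)
        return image

    def list_images(self) -> List[str]:
        return list(self.images)

    def run_pod_sandbox(self, pod_name: str) -> str:
        sandbox_id = f"sandbox-{len(self.sandboxes)}-{pod_name}"
        self.sandboxes.append(sandbox_id)
        return sandbox_id

    def create_container(self, sandbox_id: str, image: str) -> str:
        if sandbox_id not in self.sandboxes:
            raise LookupError(f"unknown sandbox: {sandbox_id}")
        if image not in self.images:
            raise LookupError(f"image not pulled: {image}")
        container_id = f"ctr-{len(self.containers)}"
        self.containers[container_id] = "CONTAINER_CREATED"
        return container_id

    def start_container(self, container_id: str) -> None:
        if container_id not in self.containers:
            raise LookupError(f"unknown container: {container_id}")
        self.containers[container_id] = "CONTAINER_RUNNING"

    def stop_container(self, container_id: str) -> None:
        if container_id not in self.containers:
            raise LookupError(f"unknown container: {container_id}")
        self.containers[container_id] = "CONTAINER_EXITED"
```

Because the kubelet only depends on the two service interfaces, any runtime that implements them can be swapped in, which is the point of the plugin design.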

Why Does Kubernetes Need CRI?

To understand the need for CRI in Kubernetes, let’s start with a few basic concepts:

- kubelet: the kubelet is a daemon that runs on every Kubernetes node. It implements the pod and node APIs that drive most of the activity within Kubernetes.

- Pods: a pod is the smallest deployable unit within Kubernetes. Each pod runs one or more containers, which together form a single functional unit.

- Pod specs: the kubelet reads pod specs, usually defined in YAML configuration files. The pod specs say which container images the pod should run. They provide no details as to how containers should run; for this, Kubernetes needs a container runtime.

- Container runtime: a Kubernetes node must have a container runtime installed. When the kubelet wants to process pod specs, it needs a container runtime to create the actual containers. The runtime is then responsible for managing the container lifecycle and communicating with the operating system kernel.
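As a small illustration of the division of labour described above, the sketch below represents a pod spec as a Python dictionary (the same structure a YAML manifest would parse into) and extracts the images the runtime would be asked to run. The pod name, container names, and image tags are made-up examples.

```python
from typing import List

# A pod spec as it might look after parsing a YAML manifest (example values).
pod_spec = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web"},
    "spec": {
        "containers": [
            {"name": "app", "image": "nginx:1.25"},
            {"name": "sidecar", "image": "busybox:1.36"},
        ]
    },
}


def images_to_run(pod: dict) -> List[str]:
    """Return the container images named in the pod spec, in order."""
    return [container["image"] for container in pod["spec"]["containers"]]
```

The spec says what to run, but nothing in it says how to create namespaces or cgroups; that part is left to the container runtime.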

What are runc and the Open Container Initiative (OCI)?

The Open Container Initiative (OCI) provides a set of industry practices that standardize the use of container image formats and container runtimes. CRI only supports container runtimes that are compliant with the Open Container Initiative.

The OCI provides specifications that must be implemented by container runtime engines. Two important pieces are:

- runc: the reference implementation of the OCI runtime specification, which serves as a seed container runtime engine. The majority of modern container runtime environments use runc and develop additional functionality around this seed engine.

- OCI image specification: OCI adopted the original Docker image format as the basis for the OCI image specification. The majority of open source build tools support this format, including BuildKit, Podman, and Buildah. Container runtimes that implement the OCI runtime specification can unbundle OCI images and run their content as a container.

Does Docker Support CRI?

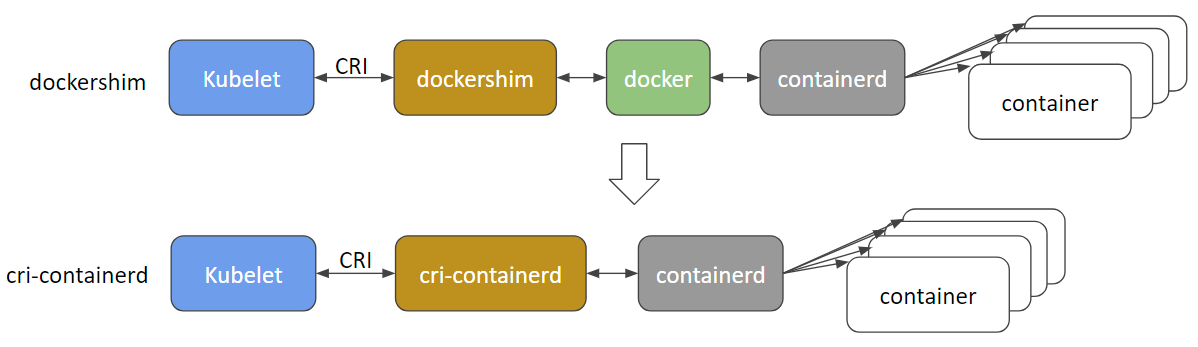

The short answer is no. In the past, Kubernetes included a bridge called dockershim, which enabled Docker to work with CRI. Dockershim was deprecated in v1.20 and removed from Kubernetes in v1.24, so Docker Engine can no longer be used as a node runtime without an external adapter such as cri-dockerd. Images built with Docker continue to work, because they are OCI-compliant images that any CRI runtime can run.
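One way to see which runtime a node uses is the `containerRuntimeVersion` field that each node reports under `status.nodeInfo`, in the form `<runtime>://<version>`. The helper below is a minimal sketch that parses such a string. The function names and sample values are illustrative, and how you fetch the field (for example with `kubectl get nodes -o wide`) is left out.

```python
from typing import Tuple


def parse_runtime_version(value: str) -> Tuple[str, str]:
    """Split a '<runtime>://<version>' string into (runtime, version)."""
    name, separator, version = value.partition("://")
    if not separator or not name or not version:
        raise ValueError(f"unexpected runtime version format: {value!r}")
    return name, version


def reports_docker(value: str) -> bool:
    """True if the node reports Docker as its container runtime."""
    return parse_runtime_version(value)[0] == "docker"
```

For example, `parse_runtime_version("containerd://1.6.8")` yields the runtime name and version as a pair, and `reports_docker` can flag nodes that still depend on a Docker adapter.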


The following table shows the most common container runtime environments that support CRI, and thus can be used within Kubernetes, their support in managed Kubernetes platforms, and their pros and cons.

| Container Runtime | Support in Kubernetes Platforms | Pros | Cons |
| --- | --- | --- | --- |
| Containerd | Google Kubernetes Engine, IBM Kubernetes Service, Alibaba | Tested at huge scale, used in all Docker containers. Uses less memory and CPU than Docker. Supports Linux and Windows. | No Docker API socket. Lacks Docker’s convenient CLI tools. |
| CRI-O | Red Hat OpenShift, SUSE Container as a Service | Lightweight, all the features needed by Kubernetes and no more. UNIX-like separation of concerns (client, registry, build). | Mainly used within Red Hat platforms. Not easy to install on non Red Hat operating systems. Only supported in Windows Server 2019 and later. |
| Kata Containers | OpenStack | Provides full virtualization based on QEMU. Improved security. Integrates with Docker, CRI-O, containerd, and Firecracker. Supports ARM, x86_64, AMD64. | Higher resource utilization. Not suitable for lightweight container use cases. |
| AWS Firecracker | All AWS services | Accessible via direct API or containerd. Tight kernel access using seccomp jailer. | New project, less mature than other runtimes. Requires more manual steps, developer experience still in flux. |


Before we dive deeper into container runtime projects, let’s see what containers actually are and how they are created.

What is a container?

- Container Image – A container image is essentially a set of tarballs with a JSON configuration file attached. It usually consists of multiple filesystem layers stacked on top of each other. Each layer holds files ranging from the base OS to the applications you are going to run inside the container.

- Container Image registry – A container is created from a container image. The image usually comes from a “container registry” that exposes the metadata and the files for download over a simple HTTP-based protocol. Examples include Docker Hub by Docker, Red Hat’s registry for its OpenShift project, Microsoft’s registry for Azure, ECR on AWS, and GitLab’s registry for its continuous integration platform.

- Container – The runtime extracts that layered image onto a copy-on-write (CoW) filesystem. This is usually done using an overlay filesystem, where all the container layers overlay each other to create a merged filesystem. Finally, the runtime actually executes the container, which means telling the kernel to assign resource limits, create isolation layers (for processes, networking, and filesystems), and so on, using a cocktail of mechanisms like control groups (cgroups), namespaces, capabilities, seccomp, AppArmor, SELinux, and whatnot. For Docker, docker run is what creates and runs the container, but underneath it actually calls the runc command.
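The layering idea can be sketched in a few lines of Python. This is a simplified model, not how an overlay filesystem is implemented: each layer is a dictionary of path to content, later layers win, and a file named with the `.wh.` prefix (the whiteout convention used by OCI image layers) hides a path from the layers below it. The example paths and contents are invented.

```python
import posixpath
from typing import Dict, List

WHITEOUT_PREFIX = ".wh."  # OCI image layers mark deletions with this prefix


def merge_layers(layers: List[Dict[str, str]]) -> Dict[str, str]:
    """Merge layers (lowest first) into the view a container would see."""
    merged: Dict[str, str] = {}
    for layer in layers:
        # First apply whiteouts: they hide entries from lower layers only.
        for path in layer:
            name = posixpath.basename(path)
            if name.startswith(WHITEOUT_PREFIX):
                hidden = posixpath.join(
                    posixpath.dirname(path), name[len(WHITEOUT_PREFIX):]
                )
                for existing in list(merged):
                    if existing == hidden or existing.startswith(hidden + "/"):
                        del merged[existing]
        # Then add or override files from this layer.
        for path, content in layer.items():
            if not posixpath.basename(path).startswith(WHITEOUT_PREFIX):
                merged[path] = content
    return merged


base = {"/etc/motd": "hello", "/bin/sh": "shell-v1"}
app = {"/bin/sh": "shell-v2", "/etc/.wh.motd": "", "/app/main": "binary"}
view = merge_layers([base, app])
```

Here the upper layer replaces `/bin/sh`, hides `/etc/motd`, and adds `/app/main`, while the base layer itself is never modified, which is what lets many containers share the same lower layers.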

The Open Container Initiative (OCI) now standardizes most of the above under a few specifications:

- The Image Specification (often referred to as “OCI 1.0 images”) which defines the content of container images.

- The Runtime Specification (the OCI runtime-spec, not to be confused with the Kubernetes Container Runtime Interface, CRI), which describes the “configuration, execution environment, and lifecycle of a container”

- The Container Network Interface (CNI) specifies how to configure network interfaces inside containers, though it was standardized under the Cloud Native Computing Foundation (CNCF) umbrella, not the OCI
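To give a feel for what the Runtime Specification covers, the sketch below builds a minimal `config.json`-style document of the kind an OCI runtime such as runc reads from a bundle directory. The field names follow the runtime specification, but the values (hostname, rootfs path, environment) are placeholders, the function name is hypothetical, and the result is not validated against the official schema.

```python
import json
from typing import Any, Dict, List


def minimal_runtime_config(args: List[str], rootfs: str = "rootfs") -> Dict[str, Any]:
    """Build a minimal OCI-runtime-style configuration (illustrative values)."""
    return {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": list(args),  # command to execute inside the container
            "env": ["PATH=/usr/local/bin:/usr/bin:/bin"],
            "cwd": "/",
        },
        "root": {"path": rootfs, "readonly": True},  # unpacked image filesystem
        "hostname": "demo",
        "linux": {
            # Each namespace listed here isolates one aspect of the process.
            "namespaces": [
                {"type": kind}
                for kind in ("pid", "network", "ipc", "uts", "mount")
            ]
        },
    }


config = minimal_runtime_config(["sleep", "60"])
config_json = json.dumps(config, indent=2)
```

The image specification describes how `rootfs` gets its content, while this document tells the runtime which process to start and which isolation mechanisms to set up around it.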

Kubernetes 1.5 introduced an internal plugin API named Container Runtime Interface (CRI) to provide easy access to different container runtimes. CRI enables Kubernetes to use a variety of container runtimes without the need to recompile. In theory, Kubernetes could use any container runtime that implements CRI to manage pods, containers and container images.

Selecting a container runtime for use with Kubernetes

Interfaces

- Native

  - Docker

  - rktnetes

- CRI

  - cri-containerd

  - rktlet

  - cri-o

  - frakti

OCI (Open Container Initiative) Compatible Runtimes

- Containers

  - bwrap-oci

  - crun

  - railcar

  - rkt

  - runc

  - runlxc

- Virtual Machines

  - Clear Containers

  - runv

There are multiple container “runtimes”, which are programs that can create and execute containers that are typically fetched from images. That space is slowly reaching maturity both in terms of standards and implementation.

Docker

Docker was first released in March 2013, and its containerd 1.0 was released during KubeCon in December 2017. Originally, Docker used LXC but its isolation layers were incomplete, so Docker wrote libcontainer, which eventually became runc. When Kubernetes was introduced in 2014, it naturally used Docker, as Docker was the only runtime available at the time. But Docker is an ambitious company and kept on developing new features on its own. Docker Compose, for example, reached 1.0 at the same time as Kubernetes. While there are ways to make the two tools interoperate using tools such as Kompose, Docker is often seen as a big project doing too many things. This situation led CoreOS to release a simpler, standalone runtime in the form of rkt.

rkt (Rocket)

rkt is an application container engine developed by CoreOS for modern production cloud-native environments. It features a pod-native approach, a pluggable execution environment, and a well-defined surface area that makes it ideal for integration with other systems. The core execution unit of rkt is the pod, a collection of one or more applications executing in a shared context (rkt’s pods are synonymous with the concept in the Kubernetes orchestration system).

Since its introduction by CoreOS in December 2014, the rkt project has greatly matured and is widely used. It is available for most major Linux distributions and every rkt release builds self-contained rpm/deb packages that users can install. These packages are also available as part of the Kubernetes repository to enable testing of the rkt + Kubernetes integration.

CRI-O

CRI-O 1.0 was released a few months ago. Started in late 2016 by Red Hat for its OpenShift platform, the project also benefits from support by Intel and SUSE, according to Mrunal Patel, lead CRI-O developer at Red Hat. CRI-O implements the Kubernetes CRI on top of OCI-compatible runtimes and supports the OCI and Docker image formats. It can also verify image GPG signatures. It uses the CNI package for networking and supports CNI plugins, which OpenShift uses for its software-defined networking layer. It supports multiple CoW filesystems, like the commonly used overlay and aufs, but also the less common Btrfs.

cri-containerd

Over the past 6 months, engineers from Google, Docker, IBM, ZTE, and ZJU have worked to implement CRI for containerd. The project is called cri-containerd, which had its feature complete v1.0.0-alpha.0 release on September 25, 2017. With cri-containerd, users can run Kubernetes clusters using containerd as the underlying runtime without Docker installed.

Containerd – Containerd is an OCI compliant core container runtime designed to be embedded into larger systems. It provides the minimum set of functionality to execute containers and manages images on a node. It was initiated by Docker Inc. and donated to CNCF in March of 2017. The Docker engine itself is built on top of earlier versions of containerd, and will soon be updated to the newest version. Containerd is close to a feature complete stable release, with 1.0.0-beta.1 available right now.

cri-containerd – Cri-containerd is exactly that: an implementation of CRI for containerd. It operates on the same node as the Kubelet and containerd. Layered between Kubernetes and containerd, cri-containerd handles all CRI service requests from the Kubelet and uses containerd to manage containers and container images. Cri-containerd manages these service requests in part by forming containerd service requests while adding sufficient additional function to support the CRI requirements.

Cri-containerd uses containerd to manage the full container lifecycle and all container images. It also manages pod networking via CNI (another CNCF project).

LXD

LXD is a next generation system container manager. It offers a user experience similar to virtual machines but using Linux containers instead. It’s image based with pre-made images available for a wide number of Linux distributions and is built around a very powerful, yet pretty simple, REST API.

Some of the biggest features of LXD are:

- Secure by design (unprivileged containers, resource restrictions and much more)

- Scalable (from containers on your laptop to thousands of compute nodes)

- Intuitive (simple, clear API and crisp command line experience)

- Image based (with a wide variety of Linux distributions published daily)

- Support for Cross-host container and image transfer (including live migration with CRIU)

- Advanced resource control (cpu, memory, network I/O, block I/O, disk usage and kernel resources)

- Device passthrough (USB, GPU, unix character and block devices, NICs, disks and paths)

- Network management (bridge creation and configuration, cross-host tunnels, …)

- Storage management (support for multiple storage backends, storage pools and storage volumes)

Java containers

Jetty, Tomcat, WildFly and Spring Boot are all examples of container technologies that enable standalone Java applications. They have been used for years to incorporate parts of the Java runtime into the app itself. The result is a Java app that can run without requiring an external Java environment, making the app containerised. While Java containers are indeed container technologies, they are not a variant of the Linux containers that are associated with the Docker hype.

Unikernels

Applications that you commonly find running in a Docker container may require only a fraction of the advanced capabilities offered by the virtualised environment they run in. Unikernels are designed to optimise the resources required by a container at runtime by mapping the runtime dependencies of the application and packaging only the system functionality that is needed into a single image. Unlike Docker containers, which rely on external resources and a host environment, unikernels can boot and run entirely on their own, without a host OS or external libraries. Unikernels can reduce complexity, improve portability and reduce the attack surface of applications; however, they require new development and deployment tooling, which is still emerging.

OpenVZ

OpenVZ is a container platform for running complete operating systems. It differs from a traditional virtual machine hypervisor in that it requires both the host and guest OS to be running Linux. Because it shares the host OS kernel (like Linux containers), OpenVZ containers are much faster and more efficient than traditional hypervisors. OpenVZ is also one of the oldest container platforms still in use today, with roots going back to 2005.

Windows Server Containers

The launch of Windows Server 2016 brought the benefits of Linux containers to Microsoft workloads. Microsoft re-engineered the core Windows OS to enable container technology and worked closely with Docker to ensure parity in the Docker management tooling. There is still some work to do on optimising the size of the container images, and they will only run on Windows 10, Server 2016 or Azure, but this is great news for Microsoft-based engineering teams.

Hyper-V Containers

Greater security can be achieved by hosting Windows Server Containers in lightweight “Hyper-V” virtual machines. This brings a higher degree of resource isolation, but at the cost of efficiency and density on the host. Hyper-V containers would be used when the trust boundaries on the host OS require additional security. Hyper-V containers are built and managed in exactly the same way as Windows Server Containers, and therefore Docker containers.

Frakti

Frakti is a hypervisor-based container runtime for Kubernetes. It lets Kubernetes run pods and containers directly inside hypervisors via runV. It is lightweight and portable, but can provide much stronger isolation, with an independent kernel, than Linux-namespace-based container runtimes. Frakti serves as a CRI container runtime server, so its endpoint must be configured when starting the kubelet. In a deployment, hyperd is also required as the API wrapper of runV.

