Introduction

In 2026, reinforcement learning (RL) is an established part of applied artificial intelligence, with uses ranging from autonomous vehicles to personalized recommendation systems. RL tools let developers, data scientists, and researchers build agents that learn through trial and error which actions maximize reward in a dynamic environment.

As healthcare, finance, gaming, and robotics teams adopt RL, the choice of tool matters. Key considerations include ease of use, scalability, algorithm support, integration with existing systems, and community resources, all weighed against your project’s complexity, your team’s expertise, and your compute budget.

This post covers the top 10 reinforcement learning tools in 2026, with features, pros, cons, and a comparison table. Whether you’re a startup prototyping RL models or an enterprise deploying large-scale solutions, it should help you narrow the field.

Top 10 Reinforcement Learning Tools in 2026

1. OpenAI Gym

Brand: OpenAI

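Short Description: OpenAI Gym is an open-source toolkit for developing and comparing RL algorithms, ideal for researchers and developers experimenting with RL environments. OpenAI no longer actively maintains Gym; its maintained successor is Gymnasium from the Farama Foundation, which keeps a largely compatible API.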

Key Features:

- Extensive library of pre-built environments (e.g., Atari, MuJoCo).

- Custom environment creation for tailored RL tasks.

- Python-based, with seamless integration with TensorFlow and PyTorch.

- Supports both discrete and continuous action spaces.

- Active community with rich documentation and tutorials.

- Compatibility with third-party environments like Retro.

- Lightweight and flexible for prototyping.

Pros:

- Free and open-source with a vast community.

- Highly flexible for custom RL experiments.

- Well-documented with beginner-friendly tutorials.

Cons:

- Limited built-in support for distributed training.

- Requires additional libraries for advanced algorithms.

- Can be complex for non-Python users.
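The reset/step interaction loop that Gym-style environments expose can be sketched without installing anything. The toy `CoinFlipEnv` below is a hypothetical environment written for illustration only; it imitates the shape of the interface (`reset` returning an observation and an info dict, `step` returning five values as in recent Gym and Gymnasium releases) but does not subclass or import the real library.

```python
import random


class CoinFlipEnv:
    """Toy environment (hypothetical) that imitates the Gym-style reset/step shape.

    The agent guesses the outcome of a coin flip; a correct guess earns reward 1.
    """

    def __init__(self, episode_length=10, seed=None):
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.steps_taken = 0

    def reset(self):
        # Returns (observation, info), like recent Gym/Gymnasium releases.
        self.steps_taken = 0
        return 0, {}

    def step(self, action):
        # action: 0 (heads) or 1 (tails)
        outcome = self.rng.randint(0, 1)
        reward = 1.0 if action == outcome else 0.0
        self.steps_taken += 1
        terminated = False  # this toy task has no natural terminal state
        truncated = self.steps_taken >= self.episode_length  # time limit reached
        return outcome, reward, terminated, truncated, {}


def run_random_episode(env, seed=None):
    """Roll out one episode with a uniformly random policy; return total reward."""
    policy_rng = random.Random(seed)
    observation, info = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy_rng.randint(0, 1)
        observation, reward, terminated, truncated, info = env.step(action)
        total_reward += reward
        done = terminated or truncated
    return total_reward
```

A real custom environment would additionally declare its observation and action spaces so that algorithm libraries can size their networks; that part is omitted here.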

2. Stable-Baselines3

Brand: Open-Source (DLR, the German Aerospace Center)

Short Description: Stable-Baselines3 is a set of reliable RL algorithms built on PyTorch, designed for researchers and practitioners seeking robust implementations.

Key Features:

- Implements popular algorithms like PPO, DQN, and A2C.

- PyTorch-based for GPU-accelerated training.

- Modular design for easy customization.

- Pre-trained models for quick experimentation, available through the companion RL Baselines3 Zoo.

- Integration with OpenAI Gym and custom environments.

- Detailed documentation and example scripts.

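- Vectorized environments for running several environment copies in parallel (multi-agent RL is not supported natively and needs external wrappers).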

Pros:

- High-quality, stable algorithm implementations.

- Easy to use for PyTorch users.

- Active community and regular updates.

Cons:

- Limited to PyTorch, so less flexible for TensorFlow users.

- Steeper learning curve for beginners.

- Fewer built-in environments compared to Gym.

3. RLlib (Ray)

Brand: Ray (Anyscale)

Short Description: RLlib is a scalable RL library built on Ray, tailored for distributed training and large-scale RL applications, perfect for enterprises and advanced researchers.

Key Features:

- Scalable distributed training with Ray’s infrastructure.

- Supports multiple frameworks (TensorFlow, PyTorch).

- Implements algorithms like PPO, DDPG, and IMPALA.

- Multi-agent and hierarchical RL support.

- Integration with OpenAI Gym and custom environments.

- Cloud-native with seamless cluster deployment.

- Extensive hyperparameter tuning tools.

Pros:

- Excellent for large-scale and distributed RL tasks.

- Framework-agnostic, supporting both TensorFlow and PyTorch.

- Robust multi-agent RL capabilities.

Cons:

- Complex setup for distributed systems.

- Requires familiarity with Ray’s ecosystem.

- Higher resource demands for small projects.
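The idea behind distributed RL training is that several workers collect experience at the same time while a central learner consumes the merged batch. The sketch below illustrates only that pattern, using standard-library threads as a stand-in. It is not RLlib's API; Ray distributes workers across processes and machines rather than threads, and the function names and toy task here are hypothetical.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def collect_rollout(worker_id, num_steps):
    """Hypothetical worker: gathers (state, action, reward) tuples from a toy task."""
    rng = random.Random(worker_id)  # per-worker seed keeps runs reproducible
    transitions = []
    state = 0
    for _ in range(num_steps):
        action = rng.choice([-1, 1])
        next_state = state + action
        reward = 1.0 if next_state > state else 0.0  # moving up is rewarded
        transitions.append((state, action, reward))
        state = next_state
    return transitions


def gather_batch(num_workers=4, steps_per_worker=50):
    """Run all workers concurrently and merge their experience into one batch."""
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        futures = [
            pool.submit(collect_rollout, worker_id, steps_per_worker)
            for worker_id in range(num_workers)
        ]
        batch = []
        for future in futures:
            batch.extend(future.result())
    return batch
```

In a real distributed setup the learner would also broadcast updated policy weights back to the workers after each training step, which is where most of the setup complexity listed above comes from.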

4. TensorFlow Agents

Brand: Google

Short Description: TensorFlow Agents is an RL framework within TensorFlow, designed for developers building production-ready RL models with TensorFlow ecosystems.

Key Features:

- Comprehensive RL algorithm suite (DQN, DDPG, SAC).

- TensorFlow integration for seamless model building.

- Support for custom environments and policies.

- GPU/TPU acceleration for faster training.

- Tools for evaluation and visualization.

- Scalable for enterprise-grade applications.

- Integration with TensorFlow Extended (TFX).

Pros:

- Seamless integration with TensorFlow workflows.

- Scalable for production environments.

- Strong community and documentation.

Cons:

- Steep learning curve for non-TensorFlow users.

- Less flexible for rapid prototyping than PyTorch-based tools.

- Resource-intensive for smaller projects.

5. PyTorch RL (TorchRL)

Brand: Meta AI

Short Description: TorchRL is PyTorch’s RL library, offering flexible tools for researchers and developers building dynamic RL models with PyTorch’s ecosystem.

Key Features:

- Dynamic computation graphs for easier debugging.

- Implements algorithms like PPO, SAC, and DDPG.

- Native support for PyTorch’s ecosystem.

- Modular design for custom RL components.

- Integration with OpenAI Gym and custom environments.

- Support for multi-agent RL.

- Lightweight and research-friendly.

Pros:

- Intuitive for PyTorch users.

- Flexible for rapid prototyping and research.

- Strong support for dynamic RL models.

Cons:

- Limited scalability for production compared to RLlib.

- Smaller community than TensorFlow or Gym.

- Fewer pre-built environments.

6. Dopamine

Brand: Google

Short Description: Dopamine is a lightweight RL framework focused on value-based methods, ideal for researchers studying RL fundamentals with a simple interface.

Key Features:

- Focuses on value-based algorithms like DQN and C51.

- TensorFlow-based for reliable performance.

- Pre-built Atari environments for quick testing.

- Modular and easy-to-extend architecture.

- Extensive visualization tools for training analysis.

- Lightweight and beginner-friendly.

- Open-source with active community support.

Pros:

- Simple and focused for value-based RL research.

- Easy to set up and use for beginners.

- Strong visualization tools for debugging.

Cons:

- Limited algorithm variety (value-based focus).

- Less suited for large-scale or multi-agent tasks.

- Relies heavily on TensorFlow.

7. Acme

Brand: DeepMind

Short Description: Acme is a research-oriented RL framework from DeepMind, designed for building and testing cutting-edge RL algorithms with high modularity.

Key Features:

- Modular design for rapid algorithm prototyping.

- Supports a wide range of RL algorithms (DQN, D4PG).

- TensorFlow-based with JAX support for efficiency.

- Multi-agent and distributed training capabilities.

- Integration with OpenAI Gym and DeepMind environments.

- Extensive logging and evaluation tools.

- Open-source with research-focused documentation.

Pros:

- Highly modular for advanced research.

- Supports cutting-edge RL algorithms.

- Strong DeepMind community support.

Cons:

- Complex for non-research users.

- Limited beginner-friendly resources.

- Requires familiarity with TensorFlow/JAX.

8. ReAgent

Brand: Meta AI

Short Description: ReAgent is Meta’s open-source RL platform for production-grade applications, ideal for enterprises deploying RL in recommendation systems or robotics.

Key Features:

- Optimized for large-scale production environments.

- Supports algorithms like DQN, SAC, and SlateQ.

- PyTorch-based with seamless Meta ecosystem integration.

- Tools for offline RL and bandit algorithms.

- Scalable for real-time applications.

- Integration with Spark for big data processing.

- Open-source with enterprise-grade features.

Pros:

- Ideal for production-scale RL deployments.

- Strong support for offline RL.

- Robust Meta-backed ecosystem.

Cons:

- Complex setup for small-scale projects.

- Limited community compared to TensorFlow or PyTorch.

- Steep learning curve for beginners.
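Bandit algorithms, mentioned in the feature list above, pick between a fixed set of options (for example, which item to recommend) while learning each option's payoff. A minimal epsilon-greedy sketch in plain Python follows. It is a generic textbook version, not ReAgent's implementation, and the click rates passed in are made-up values.

```python
import random


def epsilon_greedy_bandit(click_rates, rounds=5000, epsilon=0.1, seed=0):
    """Estimate per-arm reward with epsilon-greedy; click_rates are made-up values."""
    rng = random.Random(seed)
    num_arms = len(click_rates)
    pulls = [0] * num_arms
    estimates = [0.0] * num_arms

    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(num_arms)  # explore: pick a random arm
        else:
            arm = max(range(num_arms), key=lambda a: estimates[a])  # exploit
        # Simulated feedback: 1.0 for a click, 0.0 otherwise.
        reward = 1.0 if rng.random() < click_rates[arm] else 0.0
        pulls[arm] += 1
        # Incremental mean: move the estimate toward the observed reward.
        estimates[arm] += (reward - estimates[arm]) / pulls[arm]

    return estimates, pulls
```

Calling `epsilon_greedy_bandit([0.1, 0.5, 0.9])` returns the learned estimates and how often each arm was pulled. Raising `epsilon` trades short-term reward for more exploration.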

9. MushroomRL

Brand: Open-Source (Politecnico di Milano)

Short Description: MushroomRL is a Python-based RL library focused on simplicity and modularity, suitable for researchers and students exploring RL algorithms.

Key Features:

- Implements core RL algorithms (Q-Learning, SARSA, PPO).

- Supports OpenAI Gym and custom environments.

- Lightweight and modular design.

- Cross-framework support (TensorFlow, PyTorch).

- Built-in visualization tools for training.

- Open-source with academic focus.

- Easy-to-use API for beginners.

Pros:

- Simple and beginner-friendly.

- Flexible across frameworks.

- Lightweight for small-scale experiments.

Cons:

- Limited scalability for large projects.

- Smaller community and fewer updates.

- Fewer advanced features than RLlib or Acme.
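For readers new to the core algorithms listed above, tabular Q-learning fits in a few lines. The sketch below is a generic version on a hypothetical five-state chain where the agent starts at state 0 and is rewarded for reaching the last state. It does not use MushroomRL's API, and the hyperparameter defaults are arbitrary illustrative choices.

```python
import random


def train_q_learning(num_states=5, episodes=500, alpha=0.1, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy chain: move right from state 0 to reach the goal."""
    rng = random.Random(seed)
    goal = num_states - 1
    q = [[0.0, 0.0] for _ in range(num_states)]  # q[state][action]; 0=left, 1=right

    for _ in range(episodes):
        state = 0
        while state != goal:
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)  # explore, or break ties randomly
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Terminal states contribute no future value.
            future = 0.0 if next_state == goal else max(q[next_state])
            # Q-learning update: bootstrap from the best next action (off-policy).
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q
```

SARSA differs only in the bootstrap term: it uses the value of the action the agent actually takes next instead of the maximum over next actions.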

10. Tianshou

Brand: Open-Source (Tsinghua University)

Short Description: Tianshou is a PyTorch-based RL framework emphasizing modularity and efficiency, ideal for researchers and developers seeking flexible RL solutions.

Key Features:

- Implements algorithms like DQN, PPO, and SAC.

- PyTorch-based with GPU support.

- Modular and extensible architecture.

- Supports multi-agent and offline RL.

- Integration with OpenAI Gym and custom environments.

- Lightweight and research-friendly.

- Active open-source community.

Pros:

- Highly modular and efficient.

- Strong support for PyTorch users.

- Active development and community.

Cons:

- Limited production-scale features.

- Smaller ecosystem than TensorFlow or RLlib.

- Requires PyTorch familiarity.

Comparison Table

| Tool Name | Best For | Platform(s) Supported | Standout Feature | Pricing | Approx. Rating (GitHub Stars) |
|---|---|---|---|---|---|
| OpenAI Gym | Researchers, beginners | Windows, Linux, macOS | Extensive environment library | Free | 4.8/5 (GitHub Stars: 35k) |
| Stable-Baselines3 | Researchers, PyTorch users | Windows, Linux, macOS | Reliable algorithm implementations | Free | 4.7/5 (GitHub Stars: 8k) |
| RLlib (Ray) | Enterprises, distributed RL | Windows, Linux, macOS | Scalable distributed training | Free / Custom | 4.6/5 (GitHub Stars: 3k) |
| TensorFlow Agents | Enterprises, TensorFlow users | Windows, Linux, macOS | TensorFlow ecosystem integration | Free | 4.5/5 (GitHub Stars: 2.5k) |
| PyTorch RL (TorchRL) | Researchers, PyTorch users | Windows, Linux, macOS | Dynamic computation graphs | Free | 4.4/5 (GitHub Stars: 1.5k) |
| Dopamine | Researchers, value-based RL | Windows, Linux, macOS | Lightweight value-based RL focus | Free | 4.6/5 (GitHub Stars: 10k) |
| Acme | Advanced researchers | Windows, Linux, macOS | Modular research-oriented design | Free | 4.3/5 (GitHub Stars: 3.5k) |
| ReAgent | Enterprises, production RL | Windows, Linux, macOS | Offline RL and production scalability | Free | 4.4/5 (GitHub Stars: 3k) |
| MushroomRL | Students, researchers | Windows, Linux, macOS | Simple and beginner-friendly API | Free | 4.2/5 (GitHub Stars: 800) |
| Tianshou | Researchers, PyTorch users | Windows, Linux, macOS | Modular and efficient design | Free | 4.5/5 (GitHub Stars: 7k) |

Note: Ratings are approximated based on GitHub stars and community feedback, as G2/Capterra/Trustpilot data is limited for open-source RL tools.

Which Reinforcement Learning Tool is Right for You?

Choosing the right RL tool in 2026 depends on your team’s expertise, project scale, and specific use case. Here’s a decision-making guide:

- Startups and Small Teams: OpenAI Gym and MushroomRL are ideal for their simplicity, free access, and beginner-friendly interfaces. They suit prototyping and small-scale experiments without heavy computational demands.

- Researchers and Academics: Stable-Baselines3, PyTorch RL (TorchRL), Dopamine, Acme, and Tianshou are research-oriented, offering flexibility for custom algorithms and experiments. Acme and Tianshou stand out for cutting-edge research, while Dopamine is best for value-based RL studies.

- Enterprises with Production Needs: RLlib, TensorFlow Agents, and ReAgent are suited for large-scale, production-grade RL deployments. RLlib excels in distributed training, TensorFlow Agents integrates with enterprise TensorFlow pipelines, and ReAgent is ideal for real-time applications like recommendation systems.

- PyTorch vs. TensorFlow Users: PyTorch users should lean toward Stable-Baselines3, TorchRL, or Tianshou for seamless integration, while TensorFlow users will find TensorFlow Agents and Dopamine more intuitive.

- Budget Considerations: Most RL tools are open-source and free. RLlib is also free to use, but running it at enterprise scale on managed or cloud-hosted Ray clusters (for example through Anyscale) adds infrastructure costs. Ensure your infrastructure supports the tool’s computational needs.

- Industry-Specific Needs: For gaming or robotics, OpenAI Gym and RLlib offer robust environments. For recommendation systems, ReAgent’s offline RL capabilities are unmatched. Healthcare and finance may benefit from TensorFlow Agents’ scalability and integration with enterprise systems.

Evaluate your team’s familiarity with Python, PyTorch, or TensorFlow, and consider testing tools with free versions or demos to assess compatibility.
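The guide above can be turned into a small lookup for quick filtering. In this sketch the audience and framework labels are transcribed from this article, with tools described as working with both frameworks marked "any"; the data layout and the `shortlist` helper are illustrative assumptions, not part of any of the listed libraries.

```python
# Audience and framework labels are transcribed from this guide; the data
# layout and the shortlist() helper are illustrative assumptions.
TOOLS = {
    "OpenAI Gym": ("startup", "any"),
    "Stable-Baselines3": ("research", "pytorch"),
    "RLlib (Ray)": ("enterprise", "any"),
    "TensorFlow Agents": ("enterprise", "tensorflow"),
    "PyTorch RL (TorchRL)": ("research", "pytorch"),
    "Dopamine": ("research", "tensorflow"),
    "Acme": ("research", "tensorflow"),
    "ReAgent": ("enterprise", "pytorch"),
    "MushroomRL": ("startup", "any"),
    "Tianshou": ("research", "pytorch"),
}


def shortlist(audience, framework=None):
    """Return tool names matching an audience and, optionally, a framework."""
    matches = []
    for name, (tool_audience, tool_framework) in TOOLS.items():
        if tool_audience != audience:
            continue
        # Tools marked "any" pass every framework filter.
        if framework is not None and tool_framework not in ("any", framework):
            continue
        matches.append(name)
    return sorted(matches)
```

For example, `shortlist("research", "pytorch")` narrows the ten tools to the PyTorch-based research libraries. A real selection would also weigh the pros and cons listed for each tool.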

Conclusion

In 2026, reinforcement learning tools are in use across industries, from autonomous systems to intelligent automation. The 10 tools covered here give researchers, startups, and enterprises different trade-offs in scalability, ease of use, and algorithm support. RLlib and ReAgent target distributed and production-grade workloads, while OpenAI Gym and MushroomRL remain accessible for beginners. Because most of these tools are open-source, experimentation is cheap, but the right choice still depends on matching features to your project’s needs. Try the example scripts and community resources for your shortlisted tools on a small task before committing.

FAQs

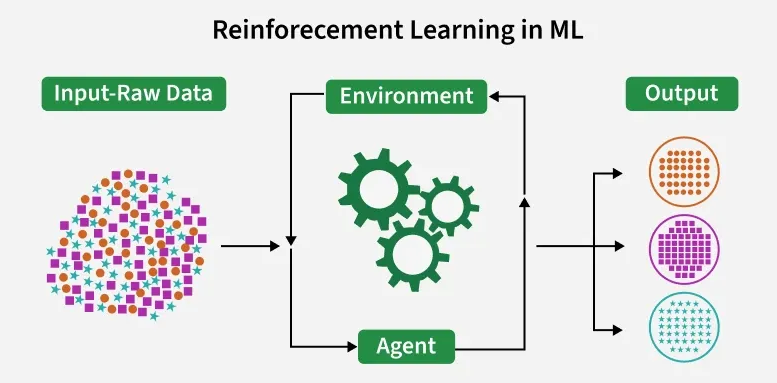

Q1: What is a reinforcement learning tool?

A: A reinforcement learning tool is a software framework or library that helps developers build, train, and deploy RL models, enabling agents to learn optimal actions through rewards in dynamic environments.

Q2: Are these reinforcement learning tools free?

A: Most RL tools, like OpenAI Gym, Stable-Baselines3, and TensorFlow Agents, are open-source and free. RLlib is open-source too, but enterprise-scale cloud deployments of it can incur infrastructure or managed-service costs.

Q3: Which RL tool is best for beginners?

A: OpenAI Gym and MushroomRL are beginner-friendly due to their simple APIs, extensive documentation, and support for custom environments.

Q4: Can these tools handle multi-agent RL?

A: Yes, RLlib, PyTorch RL, Acme, and Tianshou support multi-agent RL, making them suitable for complex scenarios like cooperative or competitive agents.

Q5: How do I choose between PyTorch and TensorFlow-based RL tools?

A: Choose PyTorch-based tools (Stable-Baselines3, TorchRL, Tianshou) for dynamic prototyping and research, and TensorFlow-based tools (TensorFlow Agents, Dopamine) for production-grade scalability and enterprise integration.
