In the early days of my career, we treated data like a static library: once it was there, it stayed there. But as systems evolved into high-frequency, AI-driven environments, that old way of thinking started to break. Data pipelines became brittle, silos between engineers and analysts grew wider, and “broken dashboards” became a daily headache.

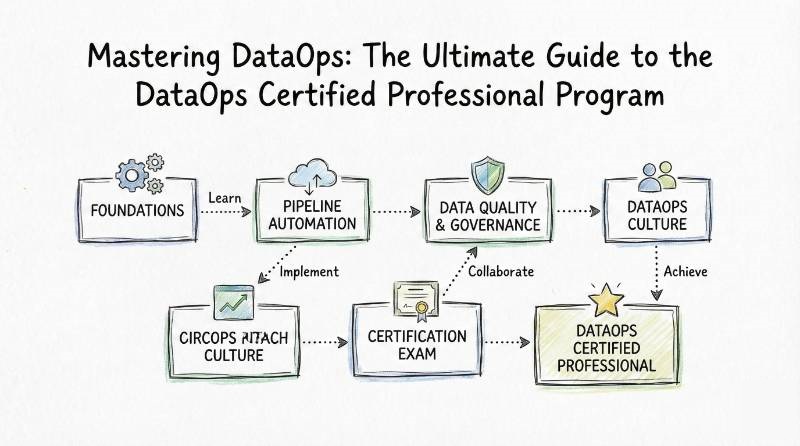

This is where DataOps changes the game. It isn’t just a buzzword; it’s the survival kit for modern software engineers and managers. If you want to move from manual data fire-fighting to automated excellence, the DataOps Certified Professional (DOCP) is the standard that proves you can handle the heat.

What is DataOps?

At its core, DataOps is the application of DevOps principles—like automation, CI/CD, and monitoring—to the world of data. It brings together people, processes, and technology to ensure that data flows from source to consumer quickly, accurately, and securely. Instead of waiting weeks for a new data set, DataOps allows teams to deliver value in hours or days.

DataOps Certified Professional (DOCP)

The DataOps Certified Professional (DOCP) is a specialized program designed to validate your expertise in building, managing, and securing automated data pipelines. It is the gold standard for those who want to bridge the gap between “Big Data” and “Reliable Operations.”

What it is

The DOCP is a professional-level certification that focuses on the end-to-end lifecycle of data. It goes beyond simple SQL or ETL; it teaches you how to orchestrate complex workflows, implement “Data-as-Code,” and ensure that every byte of data moving through your system is governed and high-quality.
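
In practice, “Data-as-Code” means a transformation lives in Git and is tested like any other code before it touches production data. The sketch below is a minimal illustration under stated assumptions, not DOCP course material. `normalize_orders`, the `Order` type, and the raw field names (`id`, `amount`, `currency`) are all hypothetical. The test function is the kind of check a CI job (for example, pytest on every pull request) could run.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Order:
    order_id: str
    amount_cents: int
    currency: str


def normalize_orders(raw_rows):
    """Hypothetical transformation: raw dict rows -> typed, deduplicated orders."""
    seen = set()
    orders = []
    for row in raw_rows:
        order_id = str(row["id"]).strip()
        if order_id in seen:
            continue  # keep the first occurrence so revenue is not double-counted
        seen.add(order_id)
        orders.append(
            Order(
                order_id=order_id,
                # Decimal avoids float rounding; assumes at most two decimal places
                amount_cents=int(Decimal(str(row["amount"])) * 100),
                currency=str(row.get("currency", "USD")).upper(),
            )
        )
    return orders


def test_normalize_orders():
    """A CI job could run this (e.g. via pytest) before any deployment."""
    raw = [
        {"id": " A1 ", "amount": "19.99", "currency": "usd"},
        {"id": "A1", "amount": "19.99"},  # duplicate once whitespace is trimmed
        {"id": "B2", "amount": "5"},
    ]
    result = normalize_orders(raw)
    assert [o.order_id for o in result] == ["A1", "B2"]
    assert result[0].amount_cents == 1999
    assert result[1].currency == "USD"


if __name__ == "__main__":
    test_normalize_orders()
    print("transformation tests passed")
```

Keeping the transformation a pure function of its input is what makes it testable. The same rows always produce the same output, so a failing test points at a code change rather than a data accident.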

Who should take it

- Data Engineers: Looking to automate their pipeline deployments.

- Software Engineers: Looking to specialize in data-intensive applications.

- DevOps Engineers: Moving into the data infrastructure space.

- Engineering Managers: Overseeing data platform teams and wanting to understand modern delivery standards.

- Data Architects: Designing scalable, resilient data ecosystems.

Skills you’ll gain

- Pipeline Orchestration: Mastering tools like Apache Airflow and Dagster to manage complex workflows.

- Continuous Data Integration: Implementing CI/CD for data pipelines and transformation models.

- Data Observability: Setting up real-time monitoring and alerting for data drift and pipeline health.

- Infrastructure as Code (IaC): Using Terraform and Kubernetes to deploy data environments.

- Data Governance & Security: Automating access controls, lineage tracing, and compliance checks (GDPR/HIPAA).

- Agile Data Management: Applying Scrum and Kanban to data engineering tasks.
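
Airflow and Dagster both model a pipeline as a directed acyclic graph (DAG) of tasks. The sketch below shows the core idea without depending on either tool's API, using Python's standard-library `graphlib` (Python 3.9+). It rejects cyclic pipelines and groups tasks into waves that could run in parallel. The task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_sales": {"extract_orders", "extract_customers"},
    "quality_checks": {"transform_sales"},
    "publish_dashboard": {"quality_checks"},
}


def is_acyclic(dag):
    """Orchestrators refuse cyclic pipelines; prepare() raises CycleError on a cycle."""
    try:
        TopologicalSorter(dag).prepare()
    except CycleError:
        return False
    return True


def execution_waves(dag):
    """Group tasks into waves; tasks in the same wave do not depend on each other."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        # A real scheduler would call done() as each task succeeds, not once per wave.
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for number, wave in enumerate(execution_waves(PIPELINE), start=1):
        print(f"wave {number}: {', '.join(wave)}")
```

Run as a script, it prints four waves, with `extract_customers` and `extract_orders` together in the first.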

Real-world projects you should be able to do

- Automated ELT Pipeline: Build a self-healing pipeline that ingests raw data, transforms it using dbt, and loads it into a warehouse with zero manual intervention.

- Observability Dashboard: Create a Grafana/Prometheus dashboard that tracks data freshness and accuracy SLAs in real-time.

- Multi-Cloud Data Mesh: Deploy a containerized data platform across AWS and Azure using Kubernetes.

- Compliance Automation: Set up automated “data masking” and lineage reports for sensitive customer datasets.
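
Tracking a freshness SLA, as in the observability project, comes down to comparing each table's last successful load time against an agreed maximum lag. The sketch below is minimal and assumes hypothetical table names and SLA values. It also renders the lag in Prometheus's text exposition format, which a scrape endpoint could serve for a Grafana dashboard. The metric name `data_freshness_lag_seconds` is an assumption, not a standard.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical per-table freshness SLAs (maximum acceptable time since last load).
FRESHNESS_SLAS = {
    "orders": timedelta(hours=1),
    "customers": timedelta(hours=24),
}


def freshness_report(last_loaded, now=None):
    """Return {table: (lag_seconds or None, within_sla)} for every table with an SLA."""
    if now is None:
        now = datetime.now(timezone.utc)
    report = {}
    for table, sla in FRESHNESS_SLAS.items():
        loaded_at = last_loaded.get(table)
        if loaded_at is None:
            report[table] = (None, False)  # never loaded counts as an SLA breach
            continue
        lag = now - loaded_at
        report[table] = (lag.total_seconds(), lag <= sla)
    return report


def to_prometheus_lines(report):
    """Render lag as a gauge in Prometheus text exposition format (hypothetical metric name)."""
    lines = ["# TYPE data_freshness_lag_seconds gauge"]
    for table, (lag, _) in sorted(report.items()):
        if lag is not None:
            lines.append(f'data_freshness_lag_seconds{{table="{table}"}} {lag}')
    return lines


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    report = freshness_report(
        {"orders": now - timedelta(minutes=90), "customers": now - timedelta(hours=2)},
        now=now,
    )
    for table, (lag, ok) in report.items():
        print(table, lag, "OK" if ok else "SLA BREACH")
    print("\n".join(to_prometheus_lines(report)))
```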

Preparation Plan

| Duration | Focus Area | Effort Level |
| --- | --- | --- |
| 7–14 Days | The Expert Sprint: Focused solely on the DataOps Manifesto and hands-on tool integration, for those already in SRE or DevOps roles. | High Intensity |
| 30 Days | The Professional Path: 1–2 hours daily. Ideal for working engineers, with time split evenly between theory and lab work. | Balanced |
| 60 Days | The Deep Dive: For beginners or managers transitioning from non-technical roles. Includes fundamental Linux and Cloud labs. | Gradual |

Common Mistakes

- Ignoring Data Quality: Focusing only on the speed of the pipeline while letting “garbage” data flow through.

- Manual Overrides: Falling back into the habit of manual SQL fixes instead of fixing the underlying code in Git.

- Tool Obsession: Learning the tool (like Airflow) without understanding the DataOps philosophy behind it.

- Lack of Collaboration: Building a perfect pipeline in a silo without talking to the data scientists who actually use the output.
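
The first two mistakes share a fix. Put the quality rules in version-controlled code, and let a failing check stop the load instead of patching bad rows by hand afterwards. Below is a minimal sketch of such a gate, assuming hypothetical order rows with `order_id` and `amount` fields. In a dbt project, the built-in `unique` and `not_null` tests express similar rules declaratively.

```python
class DataQualityError(Exception):
    """Raised when a batch fails validation and must not be loaded."""


def check_batch(rows):
    """Return a list of problems found in a batch of order rows; empty means clean."""
    problems = []
    seen_ids = set()
    for i, row in enumerate(rows):
        order_id = row.get("order_id")
        amount = row.get("amount")
        if order_id is None:
            problems.append(f"row {i}: missing order_id")
        elif order_id in seen_ids:
            problems.append(f"row {i}: duplicate order_id {order_id!r}")
        else:
            seen_ids.add(order_id)
        if amount is None:
            problems.append(f"row {i}: missing amount")
        elif amount < 0:
            problems.append(f"row {i}: negative amount {amount}")
    return problems


def load_if_clean(rows, load):
    """Quality gate: call load(rows) only if every check passes; otherwise fail loudly."""
    problems = check_batch(rows)
    if problems:
        # Failing the run (and alerting) beats silently loading "garbage" downstream.
        raise DataQualityError("; ".join(problems))
    load(rows)
    return len(rows)
```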

Best Next Certification after this

After mastering DataOps, the most logical step is to move toward MLOps Certified Professional or SRE Certified Professional to further specialize in model delivery or system reliability.

Master Certification Summary Table

| Track | Level | Who it’s for | Prerequisites | Skills Covered | Recommended Order |
| --- | --- | --- | --- | --- | --- |
| DataOps | Professional | Engineers & Managers | Basic SQL, Git, Linux | Orchestration, CI/CD, Observability | 1st (Start Here) |
| DevOps | Foundation | Software Engineers | Coding basics | Jenkins, Docker, Git | Pre-requisite |
| DevSecOps | Professional | Security/Cloud Engineers | DevOps Basics | Security Gates, Vault, Compliance | After DataOps |
| SRE | Practitioner | Operations/SREs | Linux, Monitoring | Error Budgets, Incident Response | After DataOps |
| FinOps | Associate | Managers/Architects | Cloud usage basics | Cost Optimization, Billing | Parallel |

Choose Your Path: 6 Specialized Learning Tracks

Success in the modern cloud landscape requires a specific focus. Depending on your career goals, you can follow one of these specialized paths:

The DevOps Path: The “Generalist” path. You focus on software delivery. It is the best place to start for any traditional developer.

The DevSecOps Path: The “Protector” path. You ensure that as the data and code move faster, they remain secure and compliant with global laws.

The SRE Path: The “Stabilizer” path. Your job is to keep the platform reliable enough to meet its agreed service levels. You manage “Error Budgets” and “Site Reliability.”

The AIOps/MLOps Path: The “Innovator” path. You manage the lifecycle of Artificial Intelligence. This is currently one of the highest-paying tracks in tech.

The DataOps Path: The “Streamliner” path. You focus on the data factory. This is the bridge between the database and the business value.

The FinOps Path: The “Economist” path. You help the company understand where its cloud money is going and how to get more value out of every dollar spent.
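
The SRE path's “Error Budgets” rest on simple arithmetic. For an availability SLO over a time window, the budget is (1 − SLO) × window. A 99.9% SLO over 30 days (43,200 minutes) therefore allows 0.001 × 43,200 = 43.2 minutes of downtime. The sketch below is a minimal version of that calculation. The 30-day default window is an assumption, and the same math applies to a data-freshness SLO.

```python
from datetime import timedelta


def error_budget(slo, window=timedelta(days=30)):
    """Allowed unavailability for an availability SLO such as 0.999 over a time window."""
    if not 0 < slo < 1:
        raise ValueError("slo must be strictly between 0 and 1")
    return window * (1 - slo)


def budget_remaining(slo, downtime, window=timedelta(days=30)):
    """Fraction of the error budget left; a negative value means the budget is overspent."""
    return 1 - downtime / error_budget(slo, window)


if __name__ == "__main__":
    for slo in (0.99, 0.999, 0.9999):
        print(f"{slo:.2%} over 30 days -> {error_budget(slo)} of allowed downtime")
```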

Role → Recommended Certifications Mapping

| Your Current Role | Recommended Certification Journey |
| --- | --- |
| Software Engineer | DevOps Professional → DOCP → MLOps Professional |
| Data Engineer | DOCP → MLOps Professional → Cloud Data Architect |
| SRE / Ops | SRE Practitioner → DOCP → Observability Specialist |
| Security Engineer | DevSecOps Professional → DOCP (for Data Security) |
| Cloud Engineer | Cloud Architect → FinOps Practitioner → DOCP |
| Platform Engineer | Kubernetes Specialist → DOCP → SRE Professional |
| FinOps Practitioner | FinOps Foundation → Cloud Cost Specialist → DOCP |
| Engineering Manager | DevOps Leader → FinOps for Managers → DOCP |

Training & Certification Support Institutions

If you are looking for structured help, these institutions are the leaders in providing hands-on training for the DataOps Certified Professional (DOCP).

DevOpsSchool: This is the primary authority for DOCP training. They offer an immersive, lab-heavy curriculum led by active industry practitioners. Their support includes lifetime access to course materials and a dedicated technical community for troubleshooting real-world issues.

Cotocus: A premier consulting and training organization that specializes in high-end technical transformations. They focus on corporate training, helping entire teams transition from legacy data systems to modern DataOps workflows with custom-tailored projects.

Scmgalaxy: A massive community hub and training provider that has been at the forefront of the DevOps movement for years. They offer a deep library of technical resources and hands-on bootcamps specifically for data and configuration management.

BestDevOps: Known for their “Certification Success” guarantee, they provide intensive preparation courses. Their focus is on the practical exam, ensuring students can pass the technical challenges required for the DOCP.

devsecopsschool: Specialized in the intersection of security and operations, providing the “Security layer” for DataOps practitioners.

sreschool: The go-to place for learning high-availability and reliability principles that support massive data infrastructures.

aiopsschool: A dedicated training center for those looking to advance from DataOps into the world of AI-driven operations and machine learning.

dataopsschool: A niche platform focusing exclusively on the DataOps lifecycle, providing the deepest possible technical dive into data orchestration tools.

finopsschool: Provides the financial and cloud-economic training necessary to ensure that your DataOps pipelines are cost-effective as they scale.

Next Certifications to Take

Once you have earned your DataOps Certified Professional credential, you shouldn’t stop. The tech world moves fast. Based on industry trends, here are your three best options for growth:

Same Track: MLOps Certified Professional. As you build better data pipelines, the company will want to run Machine Learning on that data. Mastering MLOps makes you indispensable.

Cross-Track: SRE Certified Professional. A data pipeline is only useful if it is “up.” Learning SRE principles helps you build self-healing systems that don’t wake you up at 3:00 AM.

Leadership: Certified DevOps Leader. If you want to move into management (VP or CTO level), you need to learn how to change company culture, not just company code.
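
One common building block of “self-healing” pipelines is to retry transient failures with exponential backoff and jitter while letting permanent errors fail fast. The sketch below is minimal. Which exceptions count as transient is an assumption you would tune per system. Airflow exposes comparable per-task behavior through operator arguments such as `retries` and `retry_exponential_backoff`.

```python
import random
import time


def retry(task, attempts=4, base_delay=1.0, max_delay=30.0,
          retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call task(); on a transient error, back off exponentially (with full jitter) and retry."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return task()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of retries: let the failure reach alerting instead of hiding it
            cap = min(max_delay, base_delay * 2 ** attempt)  # 1s, 2s, 4s, ... up to max_delay
            sleep(random.uniform(0, cap))  # jitter keeps many workers from retrying in lockstep
```

Errors outside `retry_on`, such as a bug raising `ValueError`, propagate immediately. A broken transformation should page someone rather than be retried in a loop.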

FAQs: General Career & Certification

1. How difficult is the DOCP exam? It is moderately difficult because it is hands-on. You aren’t just memorizing definitions; you are proving you can troubleshoot a broken pipeline.

2. How much time do I need to invest? For a working professional, 30 days at 1–2 hours a day is usually the “sweet spot.”

3. What are the prerequisites? You should have a basic understanding of SQL, Git (version control), and some familiarity with Linux command lines.

4. Can a manager take this course? Absolutely. It provides the “technical vocabulary” and strategic framework needed to lead high-performing data teams.

5. What is the sequence I should follow? Start with DevOps Foundations if you are new to automation, then dive into the DOCP.

6. Does this certification help with salary? Yes. In both India and global markets, “DataOps” roles often command a 20-30% premium over traditional Data Engineering roles.

7. Is the certification globally recognized? Yes, the certification from DevOpsSchool is recognized by major tech hubs from Bangalore to Silicon Valley.

8. What is the value of this over a free course? Official certification includes lab environments, real-world project validation, and a verifiable ID that proves your skills to recruiters.

9. How does DataOps differ from DevOps? DevOps focuses on software code; DataOps focuses on data flows and data quality.

10. Do I need to be a coder to pass? You need to be comfortable with scripting (Python/YAML) and SQL. You don’t need to be a “Lead Developer.”

11. Is there a renewal process? Most professional certifications suggest a refresher or moving to an “Architect” level every 2 years.

12. What are the career outcomes? Graduates often move into roles like DataOps Engineer, Senior Data Engineer, Platform Architect, or Data Operations Manager.

FAQs: DataOps Certified Professional

1. What tools are covered in the DOCP? You will gain hands-on experience with Airflow, Kafka, dbt, Docker, Kubernetes, and monitoring tools like Prometheus.

2. Does the course cover Cloud platforms? Yes, it covers implementations across AWS, Azure, and Google Cloud, focusing on cloud-native data architectures.

3. Is there a final project? Yes, to earn the professional designation, you must complete a capstone project involving a fully automated end-to-end data pipeline.

4. Can I take the exam online? Yes, the exam is available through the official portal and is proctored to maintain high standards.

5. What if I fail the first attempt? Most programs allow for a retake after a short cooling-off period, though the first attempt success rate is high with the recommended preparation.

6. Is the training instructor-led or self-paced? DevOpsSchool offers both—a 5-day intensive instructor-led bootcamp and a flexible self-paced track.

7. Does it cover Data Governance? Yes, a major module is dedicated to automating security, compliance, and data lineage.

8. How do I verify my certificate? Every certificate comes with a unique URL and ID that can be shared on LinkedIn or verified directly on the DevOpsSchool portal.
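
Automated governance often starts with masking sensitive columns. One widely used technique is keyed pseudonymization, which replaces each sensitive value with an HMAC token. The token hides the raw value but stays consistent across tables, so joins still work. The sketch below is minimal and uses the standard-library `hmac` module. The column list, token length, and key handling are assumptions. Pseudonymized data can still count as personal data under GDPR, so treat this as one control among several.

```python
import hashlib
import hmac

# Hypothetical classification: columns treated as sensitive.
SENSITIVE_COLUMNS = {"email", "phone"}


def pseudonymize(value, key):
    """Keyed, deterministic token: equal inputs give equal tokens, so joins still work."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # truncated for readability; keep the full digest if collisions matter


def mask_row(row, key):
    """Replace sensitive, non-null values with tokens; pass other columns through unchanged."""
    return {
        column: pseudonymize(str(value), key)
        if column in SENSITIVE_COLUMNS and value is not None
        else value
        for column, value in row.items()
    }


if __name__ == "__main__":
    key = b"load-me-from-a-secrets-manager"  # placeholder; never hard-code real keys
    print(mask_row({"customer_id": 42, "email": "ana@example.com", "country": "PT"}, key))
```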

Conclusion

We are living in the “Age of Data,” but most companies are still struggling with “Data Chaos.” By becoming a DataOps Certified Professional, you are positioning yourself as the person who can bring order to that chaos. Whether you are an engineer looking to boost your salary or a manager looking to stabilize your team’s delivery, DataOps is the answer.

Don’t just build pipelines; build a data factory that is fast, secure, and reliable. The future of software engineering is data-driven, and with the right certification, you can lead that charge.
