Introduction

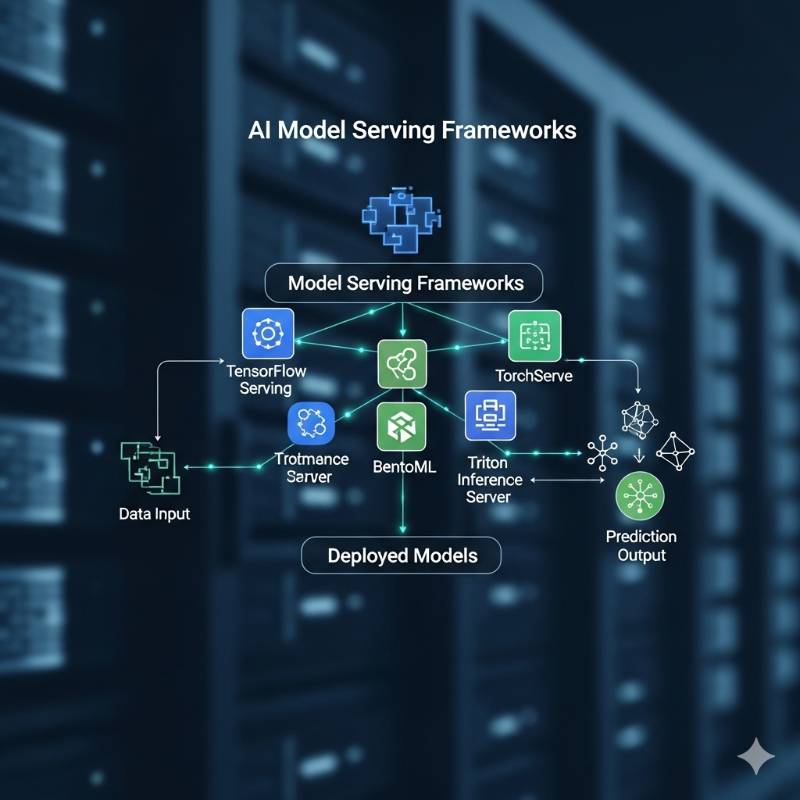

As artificial intelligence continues to move from research labs into real-world applications, AI model serving frameworks have become critical infrastructure for businesses in 2026. These tools allow organizations to deploy, manage, and scale machine learning (ML) and deep learning (DL) models efficiently. From enabling real-time inference in financial services to powering recommendation engines in e-commerce, model serving frameworks bridge the gap between training and production.

When evaluating AI model serving frameworks, decision-makers should consider scalability, latency, hardware support (CPUs, GPUs, TPUs), integration with MLOps pipelines, monitoring capabilities, and pricing. With so many options available, choosing the right one can be difficult. In this guide, we review the top 10 AI model serving frameworks in 2026, highlighting their features, pros, cons, and ideal use cases.

Top 10 AI Model Serving Frameworks in 2026

1. TensorFlow Serving

Description: TensorFlow Serving is a production-grade serving system developed by Google for deploying ML models. It’s widely used by enterprises needing tight TensorFlow integration.

Key Features:

- Native support for TensorFlow models

- High-performance gRPC and REST APIs

- Model versioning with hot swapping

- GPU acceleration support

- Extensible with custom servables

Pros:

- Mature and widely adopted in production

- Strong integration with TensorFlow ecosystem

- Reliable performance for large-scale deployments

Cons:

- Limited support for non-TensorFlow models

- Steeper learning curve for beginners
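
To make the REST API listed above more concrete, here is a minimal sketch of how a client might build a prediction request for TensorFlow Serving. It assumes the default REST port 8501 and the row-oriented `instances` payload; the host, the model name `my_model`, the input values, and the helper `build_predict_request` are hypothetical placeholders. The request is only constructed, not sent, so the snippet runs without a server.

```python
import json
from urllib import request


def build_predict_request(host, model_name, instances, version=None, port=8501):
    """Build (but do not send) a TensorFlow Serving REST predict request."""
    # Pinning a version is optional; if omitted, the latest version is assumed.
    version_part = f"/versions/{version}" if version is not None else ""
    url = f"http://{host}:{port}/v1/models/{model_name}{version_part}:predict"
    # The row format carries one entry per example under the "instances" key.
    body = json.dumps({"instances": instances}).encode("utf-8")
    return request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical model name and input values, for illustration only.
req = build_predict_request("localhost", "my_model", [[1.0, 2.0, 5.0]], version=2)
print(req.full_url)
print(req.data.decode("utf-8"))
```

Passing `req` to `urllib.request.urlopen` would send it to a running server. Pinning the version in the URL is one way to call a specific model version while a newer one is being rolled out.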

2. TorchServe

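Description: Developed by AWS and Meta, TorchServe is the PyTorch project's own model serving tool and has been a common choice for deep learning practitioners. Its repository now carries a limited-maintenance notice, so check the project's current status before adopting it for new deployments.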

Key Features:

- Native PyTorch model support

- Multi-model serving

- Model version management

- REST and gRPC APIs

- Metrics integration with Prometheus

Pros:

- Excellent PyTorch integration

- Supports multi-model workflows

- Strong community and AWS backing

Cons:

- Limited support for non-PyTorch models

- Slightly higher latency than TensorFlow Serving in some benchmarks

3. NVIDIA Triton Inference Server

Description: Triton is an open-source inference server optimized for GPUs, widely used in enterprises that require high-performance AI serving across frameworks.

Key Features:

- Supports TensorFlow, PyTorch, ONNX, XGBoost, and more

- Multi-GPU and multi-node support

- Dynamic batching for higher throughput

- Model ensemble support

- Kubernetes integration

Pros:

- Exceptional GPU optimization

- Framework-agnostic

- High throughput with low latency

Cons:

- Complex configuration for beginners

- Requires significant hardware investment
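
Dynamic batching, listed among the key features above, trades a small queueing delay for larger batches that use the GPU more fully. The toy simulation below illustrates the idea only and is not Triton's implementation: the `QueuedRequest` type, the `form_batches` helper, and the parameters `max_batch_size` and `max_queue_delay_ms` are hypothetical names chosen for this sketch.

```python
from dataclasses import dataclass


@dataclass
class QueuedRequest:
    request_id: str
    arrival_ms: float


def form_batches(requests, max_batch_size, max_queue_delay_ms):
    """Group requests into batches bounded by size and by queueing delay."""
    batches = []
    current = []
    for req in sorted(requests, key=lambda r: r.arrival_ms):
        # Flush when the oldest queued request would wait longer than allowed.
        if current and req.arrival_ms - current[0].arrival_ms > max_queue_delay_ms:
            batches.append(current)
            current = []
        current.append(req)
        # Flush as soon as the batch is full.
        if len(current) == max_batch_size:
            batches.append(current)
            current = []
    if current:
        batches.append(current)
    return batches


arrivals = [
    QueuedRequest("a", 0.0),
    QueuedRequest("b", 1.0),
    QueuedRequest("c", 2.0),
    QueuedRequest("d", 10.0),
    QueuedRequest("e", 11.0),
]
batches = form_batches(arrivals, max_batch_size=4, max_queue_delay_ms=5.0)
print([[r.request_id for r in batch] for batch in batches])
# prints [['a', 'b', 'c'], ['d', 'e']]
```

A larger delay budget produces fuller batches and higher throughput, at the cost of added latency for the first request in each batch.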

4. BentoML

Description: BentoML is a flexible, developer-friendly framework for packaging and deploying models as microservices.

Key Features:

- Simple Python API for model packaging

- Supports TensorFlow, PyTorch, Scikit-learn, and more

- Docker-native deployment

- Integration with CI/CD pipelines

- Model registry with versioning

Pros:

- Easy to use and developer-friendly

- Great for microservice-based ML deployment

- Strong community adoption

Cons:

- Less optimized for extreme scale

- Limited GPU optimization compared to Triton

5. KServe (formerly KFServing)

Description: KServe is a Kubernetes-based model serving platform built for cloud-native deployments.

Key Features:

- Knative-based autoscaling

- Multi-framework support (TensorFlow, PyTorch, XGBoost, etc.)

- Serverless inference API

- A/B testing and canary rollouts

- Integration with Kubeflow and MLflow

Pros:

- Cloud-native and scalable

- Strong Kubernetes integration

- Built-in support for multi-model deployments

Cons:

- Requires Kubernetes expertise

- Overhead for smaller teams without DevOps resources
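
Knative-based autoscaling is driven by request concurrency by default: the platform aims to keep the number of in-flight requests per replica near a target. The sketch below shows only the core arithmetic under that assumption. The function `desired_replicas` and its parameters are hypothetical, and a real autoscaler also smooths the measurement over a time window.

```python
import math


def desired_replicas(in_flight_requests, target_concurrency,
                     min_replicas=0, max_replicas=10):
    """Return how many replicas keep per-replica concurrency near the target."""
    if target_concurrency <= 0:
        raise ValueError("target_concurrency must be positive")
    # Round up so that no replica is asked to exceed the target.
    wanted = math.ceil(in_flight_requests / target_concurrency)
    # Clamp to the configured bounds; min_replicas=0 allows scale-to-zero.
    return max(min_replicas, min(wanted, max_replicas))


print(desired_replicas(0, 10))     # prints 0
print(desired_replicas(45, 10))    # prints 5
print(desired_replicas(1000, 10))  # prints 10
```

Setting `min_replicas` to 1 avoids cold starts for latency-sensitive models, in exchange for paying for an idle replica.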

6. Seldon Core

Description: Seldon Core is an open-source MLOps framework for deploying, scaling, and monitoring models in Kubernetes.

Key Features:

- Supports 20+ ML frameworks

- Advanced deployment patterns (ensembles, canaries)

- Monitoring with Prometheus and Grafana

- Model explainability integration

- REST/gRPC APIs

Pros:

- Enterprise-ready with robust monitoring

- Flexible deployment strategies

- Open-source with commercial support (Seldon Deploy)

Cons:

- Steeper learning curve

- Kubernetes dependency may deter small teams
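
A canary rollout, one of the deployment patterns listed above, sends a small share of traffic to a new model version while the rest stays on the stable one. On Kubernetes-based platforms this is normally configured declaratively rather than coded by hand, so the following is only a sketch of the underlying idea. The `route` function and the hash-bucket scheme are assumptions made for illustration, not Seldon Core's mechanism.

```python
import hashlib


def route(request_id, canary_percent):
    """Return "canary" or "stable" for a request, deterministically."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # Hash the ID into one of 100 buckets so the same ID always routes the same way.
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "canary" if bucket < canary_percent else "stable"


ids = [f"req-{i}" for i in range(10_000)]
canary_share = sum(route(i, 10) == "canary" for i in ids) / len(ids)
print(f"canary share: {canary_share:.3f}")
```

Hashing a stable key such as a user or session ID, rather than picking at random per request, keeps each caller on one version for the duration of the rollout.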

7. MLflow Model Serving

Description: Part of the MLflow ecosystem by Databricks, MLflow Model Serving makes it easy to deploy and manage models from MLflow’s model registry.

Key Features:

- Integration with MLflow tracking and registry

- REST API endpoints for deployed models

- Support for multiple ML frameworks

- Logging and metrics integration

- Deployment on Databricks and cloud platforms

Pros:

- Tight integration with ML lifecycle management

- Easy for teams already using MLflow

- Supports multiple frameworks

Cons:

- Limited advanced serving features

- Best suited for Databricks users

8. Ray Serve

Description: Ray Serve is a scalable model serving library built on Ray, ideal for distributed AI applications.

Key Features:

- Scales across clusters with Ray

- Supports batch and real-time inference

- Python-native API

- Multi-model and pipeline serving

- Integrates with Ray ecosystem (RLlib, Tune)

Pros:

- Excellent scalability for distributed AI

- Flexible API for Python developers

- Supports model composition

Cons:

- Ray ecosystem learning curve

- Less mature than longer-established serving systems

9. Clipper

Description: Clipper is a low-latency model serving system that supports multiple ML frameworks and provides consistent APIs.

Key Features:

- Supports TensorFlow, PyTorch, Scikit-learn, etc.

- Adaptive batching for throughput optimization

- REST API endpoints

- Framework-agnostic serving

- Built-in caching layer

Pros:

- Simple to use for heterogeneous models

- Focus on low-latency predictions

- Open-source and lightweight

Cons:

- Smaller community; it began as a UC Berkeley research project and is no longer actively developed

- Less feature-rich than newer tools
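
Adaptive batching, listed among Clipper's key features, means adjusting the batch size at runtime to stay within a latency target. One well-known policy for this, and the one Clipper's authors describe, is additive-increase, multiplicative-decrease (AIMD). The sketch below is a simplified illustration rather than Clipper's code: the function names, the step sizes, and the linear latency model are all assumptions.

```python
def next_batch_size(current, observed_latency_ms, latency_slo_ms,
                    increase_step=2, decrease_factor=0.9, max_size=512):
    """Return the batch size to use next under an AIMD policy."""
    if observed_latency_ms <= latency_slo_ms:
        # Under the latency objective: probe for more throughput, additively.
        return min(current + increase_step, max_size)
    # Over the objective: back off multiplicatively, never below one item.
    return max(1, int(current * decrease_factor))


def toy_latency_ms(batch_size):
    # Hypothetical latency model: 5 ms fixed overhead plus 0.5 ms per item.
    return 5.0 + 0.5 * batch_size


batch_size = 1
history = []
for _ in range(40):
    observed = toy_latency_ms(batch_size)
    batch_size = next_batch_size(batch_size, observed, latency_slo_ms=20.0)
    history.append(batch_size)
print(history[-6:])
```

With this toy latency model the batch size climbs until a batch exceeds the 20 ms objective, backs off by 10%, and then oscillates just around the largest size that meets the objective.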

10. Cortex

Description: Cortex is an open-source platform for deploying models at scale on AWS.

Key Features:

- Serverless inference on AWS

- Supports TensorFlow, PyTorch, Scikit-learn, etc.

- Autoscaling and GPU support

- YAML-based configuration

- Monitoring and logging with CloudWatch

Pros:

- Strong AWS integration

- Serverless scalability

- Easy to use for teams on AWS

Cons:

- Limited support outside AWS

- Less community adoption than Seldon or KServe

Comparison Table: Top 10 AI Model Serving Frameworks in 2026

| Tool | Best For | Platforms Supported | Standout Feature | Pricing | Avg. Rating |
|---|---|---|---|---|---|
| TensorFlow Serving | TensorFlow-heavy teams | Linux, Kubernetes | Native TF model serving | Free | 4.5/5 |
| TorchServe | PyTorch users | Linux, AWS, K8s | Multi-model PyTorch support | Free | 4.4/5 |
| Triton Server | GPU-heavy enterprises | Linux, Kubernetes | Dynamic batching & GPU accel | Free | 4.6/5 |
| BentoML | Startups & dev teams | Docker, K8s | Python-friendly packaging | Free | 4.3/5 |
| KServe | Cloud-native orgs | Kubernetes | Knative-based autoscaling | Free | 4.5/5 |
| Seldon Core | Enterprises on Kubernetes | Kubernetes | Advanced deployment patterns | Free/Custom | 4.4/5 |
| MLflow Serving | Databricks users | Cloud, Databricks | ML lifecycle integration | Free/Custom | 4.3/5 |
| Ray Serve | Distributed AI workloads | Multi-cloud, K8s | Scalable distributed serving | Free | 4.2/5 |
| Clipper | Lightweight deployments | Linux, Docker | Low-latency serving | Free | 4.0/5 |
| Cortex | AWS-centric teams | AWS | Serverless scaling | Free | 4.1/5 |

Which AI Model Serving Framework is Right for You?

Choosing the right framework depends on your team size, infrastructure, and business needs:

- Small teams/startups: BentoML or Clipper for simplicity.

- TensorFlow shops: TensorFlow Serving is the most natural fit.

- PyTorch-heavy projects: TorchServe is built specifically for PyTorch models.

- GPU-intensive workloads: NVIDIA Triton Inference Server is the most GPU-optimized option on this list.

- Enterprises with Kubernetes: Seldon Core or KServe offer scalability and flexibility.

- Databricks users: MLflow Serving integrates seamlessly.

- Distributed AI workloads: Ray Serve enables large-scale distributed serving.

- AWS-first organizations: Cortex fits well with native cloud integration.
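
The recommendations above can be captured as a simple lookup, which may help when a team matches more than one profile. This is only a sketch that restates this guide's suggestions: the keys in `RECOMMENDATIONS` and the `shortlist` helper are hypothetical names, and the result is a starting point for evaluation rather than a verdict.

```python
# Need -> suggested frameworks, as listed in this guide.
RECOMMENDATIONS = {
    "small_team": ["BentoML", "Clipper"],
    "tensorflow": ["TensorFlow Serving"],
    "pytorch": ["TorchServe"],
    "gpu": ["NVIDIA Triton Inference Server"],
    "kubernetes": ["Seldon Core", "KServe"],
    "databricks": ["MLflow Model Serving"],
    "distributed": ["Ray Serve"],
    "aws": ["Cortex"],
}


def shortlist(needs):
    """Return the suggested frameworks for the given needs, without duplicates."""
    picks = []
    for need in needs:
        # Unknown needs contribute nothing instead of raising an error.
        for tool in RECOMMENDATIONS.get(need, []):
            if tool not in picks:
                picks.append(tool)
    return picks


print(shortlist(["gpu", "kubernetes"]))
# prints ['NVIDIA Triton Inference Server', 'Seldon Core', 'KServe']
```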

Conclusion

In 2026, AI model serving frameworks are no longer optional—they are the backbone of production AI systems. From startups deploying their first model to global enterprises scaling thousands of models, the right serving framework can determine performance, cost efficiency, and ease of operations.

The landscape continues to evolve, with greater emphasis on cloud-native, distributed, and GPU-optimized serving. Because most of these tools are free and open source, teams should prototype two or three candidates on a representative workload before committing, to confirm that the choice fits their infrastructure and workflows.

FAQs

1. What is an AI model serving framework?

It’s a system that allows ML models to be deployed into production, providing APIs for real-time or batch predictions.

2. Do I need Kubernetes for model serving?

Not always. Tools like BentoML and Clipper work without Kubernetes, while KServe and Seldon Core are Kubernetes-native.

3. Which framework is best for GPUs?

NVIDIA Triton Inference Server is the most optimized for GPU-based workloads.

4. Can I serve multiple models with one framework?

Yes. Tools like TorchServe, KServe, and Seldon Core support multi-model deployments.

5. What’s the easiest framework for beginners?

BentoML is considered one of the most beginner-friendly options.

