Why Zero Downtime Matters for Revenue and Rankings

Imagine a customer about to complete a big purchase when your online store suddenly goes offline: the sale is lost, and the customer’s trust is damaged. Downtime sends prospective buyers to competitors, and even a brief interruption can jeopardize the search rankings you’ve worked to build. In e-commerce, each minute offline means abandoned carts and lost revenue. The appeal of a zero-downtime migration is that you can upgrade or re-platform your store with no perceptible outage to users. In this article, we’ll show how Blue-Green deployments and Canary releases put that promise into practice.

What “Zero Downtime” Really Means for an Online Store

“Zero downtime” means customers never notice any service interruption during a migration or deployment. Every step of the user journey (browsing products, adding to cart, and checking out) continues normally, with no errors or maintenance pages. In practice, that requires every component of the system, including any third-party services, to remain operational throughout the release. The standard to aim for is 100% perceived availability: no outage that a customer would notice.

Release Patterns Overview: Blue-Green vs. Canary

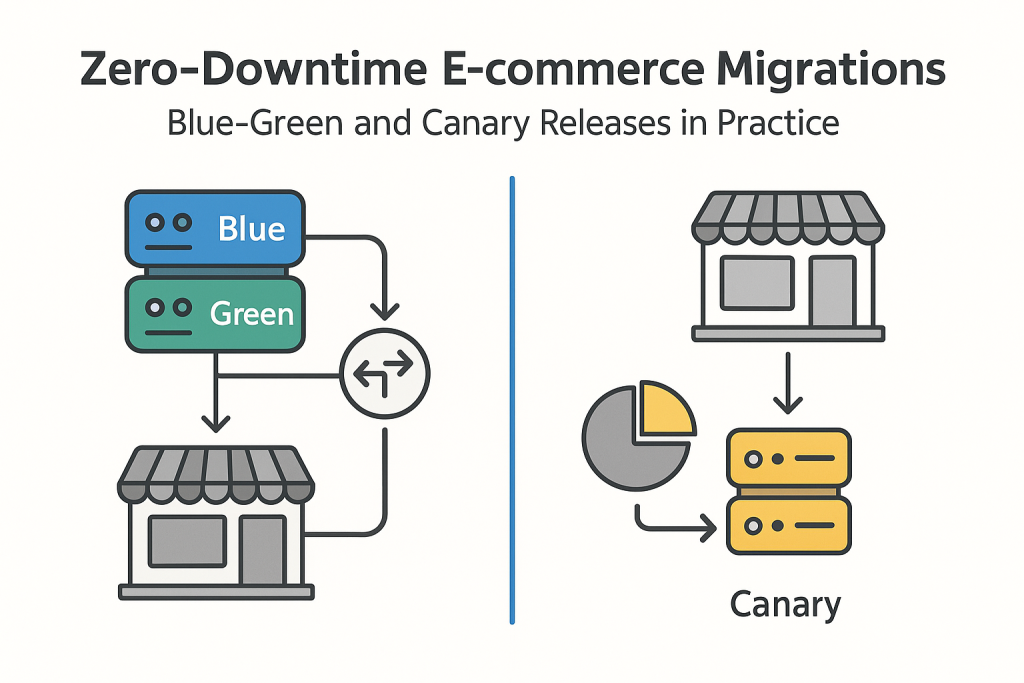

When planning a deployment with zero downtime, two common strategies are Blue-Green and Canary releases. Each has its strengths:

- Blue-Green Deployment: Maintain two production environments, Blue (current live) and Green (new version). Users stay on Blue while you prepare and test Green. Once the new version is ready, flip all traffic to Green in one switch. If something goes wrong, you can immediately switch back to Blue, minimizing disruption.

- Canary Release: Roll out the new version to a small subset of users first (e.g., 1% of traffic), then gradually increase the share (for example, to 5%, 25%, and 50%) until it reaches 100%. This gradual approach lets you catch issues on a small sample before they impact everyone. Feature flags and progressive delivery tools are often used to control the rollout. Canary releases are ideal for high-traffic or high-risk updates: if a serious issue is detected, you can quickly roll back with minimal user impact.

The Database Layer: Principles of Zero Downtime Migration

Database migrations must remain backward compatible at every phase. Use an “expand and contract” model: introduce new tables or columns while preserving the existing schema, switch the application to the new structures, and retire the old ones only after verifying stability across all deployed application versions. This sequence lets old and new application code run side by side. For substantial schema changes, use an online schema change utility, which builds a shadow copy of the table, keeps it in sync with ongoing writes, and then atomically swaps it in, avoiding long-held table locks.

During the transition window, the application may need to write to both the old and the new fields (dual writes) to prevent data drift. Run comprehensive data validation, and delay removing the legacy structures until automated tests and monitored metrics confirm parity. Retaining a snapshot of the source schema makes it easier to reverse the migration quickly if undiscovered issues emerge. Done this way, the live transactional store can be upgraded or re-platformed without measurable disruption to users.
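As a minimal sketch of the expand, dual-write, and validate phases, the example below uses Python’s built-in `sqlite3` module. The `customers` table, the `email` and `contact_email` columns, and the helper names are hypothetical and chosen only for illustration; a production migration would run against your real database, usually through a migration tool.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
conn.execute("INSERT INTO customers (email) VALUES ('old@example.com')")

# Expand: add the new column alongside the old one. Code that only
# knows about `email` keeps working because nothing was removed.
conn.execute("ALTER TABLE customers ADD COLUMN contact_email TEXT")


def save_customer(db, email):
    """Dual write: new code fills both the legacy and the new column."""
    cur = db.execute(
        "INSERT INTO customers (email, contact_email) VALUES (?, ?)",
        (email, email),
    )
    return cur.lastrowid


def backfill(db):
    """Copy values for rows written before the expand step."""
    db.execute(
        "UPDATE customers SET contact_email = email WHERE contact_email IS NULL"
    )


def drifted_rows(db):
    """Count rows where the old and new columns disagree (NULL-safe)."""
    query = "SELECT COUNT(*) FROM customers WHERE email IS NOT contact_email"
    return db.execute(query).fetchone()[0]


save_customer(conn, "new@example.com")
drift_before = drifted_rows(conn)  # the pre-existing row is not backfilled yet
backfill(conn)
drift_after = drifted_rows(conn)

# Contract (dropping `email`) would happen only after drift stays at zero
# and no deployed application version still reads the old column.
```

The drift count is the signal to watch here: the contract step is deferred until it stays at zero across the whole observation period.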

From Strategy to Execution: Crafting a Migration Plan

A successful zero-downtime migration starts with methodical preparation. Conduct a risk assessment to identify likely failure cases, then define an unambiguous rollback procedure for each one. Schedule the cutover during a low-traffic window and notify stakeholders in advance. (If SEO is a concern, preserve URLs or use proper redirects to maintain search rankings.) Many businesses enlist professional ecommerce store migration services for expert support on such high-stakes projects.

Blue-Green in Practice for Storefront + Database

Implementing a Blue-Green deployment involves:

- Preparing a Green environment that mirrors production (Blue) and keeping its data synchronized (via replication or dual writes).

- Switching traffic over to Green via the load balancer or DNS once it’s ready (with DNS, lower the record TTL in advance so the change propagates quickly). Warm up caches on Green beforehand and use session stickiness so users see no disruption.

- Monitoring post-cutover KPIs such as authentication success rate, checkout conversion, and error-budget consumption to confirm system health.

- Rolling back instantly to Blue if issues arise, then reconciling data to maintain database integrity before resuming the migration.
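The cutover and rollback steps above can be sketched as a small router that swaps which environment is live. This is an illustrative model only: `Environment`, `BlueGreenRouter`, and the `health_check` callables are hypothetical names, and in a real setup the swap would be a load balancer or DNS change instead of an in-process pointer.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Environment:
    name: str
    health_check: Callable[[], bool]  # hypothetical probe, e.g. a smoke test


@dataclass
class BlueGreenRouter:
    live: Environment
    idle: Environment
    history: List[str] = field(default_factory=list)

    def cut_over(self) -> bool:
        """Send traffic to the idle environment only if it is healthy."""
        if not self.idle.health_check():
            self.history.append(f"cutover refused: {self.idle.name} unhealthy")
            return False
        self.live, self.idle = self.idle, self.live
        self.history.append(f"switched to {self.live.name}")
        return True

    def roll_back(self) -> None:
        """Swap back to the previous environment without re-checking health."""
        self.live, self.idle = self.idle, self.live
        self.history.append(f"rolled back to {self.live.name}")


router = BlueGreenRouter(
    live=Environment("blue", lambda: True),
    idle=Environment("green", lambda: True),
)
router.cut_over()   # Green becomes live
router.roll_back()  # Blue is live again
```

Keeping the previous environment as `idle` instead of tearing it down is what makes the rollback a single swap.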

Canary Releases Step-by-Step

A canary deployment rolls out changes to users gradually:

- Segment rollout by percentage of users, specific geographies, or device types, and control access with feature flags.

- Monitor health metrics continuously, including error rates, latency, and key business KPIs, with automated halt thresholds.

- Ramp up gradually: 1% → 5% → 25% → 50% → 100% of traffic.

- Handle schema changes using backward-compatible and forward-compatible approaches so that both old and new code function correctly while they run side by side.

- Favor Canary over Blue-Green when deploying complex UI changes or in environments using a service mesh.
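One way to implement the percentage ramp and the automated halt threshold is deterministic hashing of a user identifier, sketched below. The function names and the 1% error-rate limit are assumptions made for illustration; progressive delivery tools and feature-flag services usually provide their own equivalents.

```python
import hashlib

RAMP_STEPS = [1, 5, 25, 50, 100]  # rollout percentages from the list above


def bucket(user_id: str) -> int:
    """Map a user to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def in_canary(user_id: str, percent: int) -> bool:
    """A user stays in the canary as the percentage grows, never flips out."""
    return bucket(user_id) < percent


def next_step(current_percent: int, error_rate: float,
              max_error_rate: float = 0.01) -> int:
    """Return the next rollout percentage, or 0 to halt and roll back."""
    if error_rate > max_error_rate:
        return 0
    later = [step for step in RAMP_STEPS if step > current_percent]
    return later[0] if later else 100
```

Because the bucket depends only on the user ID, a given customer sees the same version on every request, which avoids flip-flopping between old and new checkout flows mid-session.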

Database Migration to Cloud without Downtime

Moving an e-commerce database to the cloud without downtime requires a pre-provisioned target environment. Use cloud-native options such as managed replicas, a database migration service (DMS), or change data capture (CDC) tooling, and run cross-region rehearsals to validate readiness. Protect latency targets by placing read replicas close to your users. During the period when both environments run in parallel, weigh the extra infrastructure cost against the reduced risk of a migration-related failure. At cutover, reroute application traffic only after the target replica has fully caught up, and keep the legacy environment available in read-only mode so that rollback stays quick.

Observability, Validation, and Fast Rollback

In a zero downtime migration, monitor the golden signals (latency, traffic, errors, and saturation) alongside business KPIs such as average order value (AOV) and checkout success rate. Validate the new version with shadow traffic and contract tests. Maintain a one-click rollback playbook with data divergence guardrails, so you can restore the previous version immediately if thresholds are breached during deployment.
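The sketch below shows one possible reading of “rollback thresholds with data divergence guardrails”: a breached metric triggers the rollback, and the divergence check reports which records need reconciling first. The metric names, limits, and row format are hypothetical; real values would come from your monitoring stack and both databases.

```python
def breached_thresholds(metrics, limits):
    """Return the names of metrics above their limit.

    A metric that is missing from `metrics` counts as breached,
    so a broken monitoring feed fails safe.
    """
    return sorted(
        name for name, limit in limits.items()
        if metrics.get(name, float("inf")) > limit
    )


def diverged_keys(old_rows, new_rows):
    """Return primary keys whose rows differ between the two stores.

    Each row is a tuple whose first element is the primary key.
    """
    old = {row[0]: row for row in old_rows}
    new = {row[0]: row for row in new_rows}
    return sorted(k for k in old.keys() | new.keys() if old.get(k) != new.get(k))


def rollback_decision(metrics, limits, old_rows, new_rows):
    """Return ("continue", []) or ("roll_back", keys_to_reconcile)."""
    if not breached_thresholds(metrics, limits):
        return ("continue", [])
    return ("roll_back", diverged_keys(old_rows, new_rows))
```

For example, with limits of `{"error_rate": 0.01}`, an observed error rate of 5% returns `"roll_back"` together with the keys of any orders written only to the new store, which tells the operator what to reconcile before Blue takes writes again.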

Common Pitfalls (and How to Avoid Them)

Even with preparation, beware of:

- Incompatible schema changes: Removing or altering a database field that old code still uses will cause failures. Always make changes in backward-compatible phases (add first, remove later).

- Non-idempotent operations: Actions such as sending emails or charging payments may be retried or replayed during a cutover, and without protection they produce duplicate emails or double charges. Use idempotency keys or checks (e.g., unique transaction IDs) to prevent duplicates.

- Cache issues: Not refreshing caches or session stores can show stale data or log out users after a switch. Warm up caches and ensure session data is shared between old and new systems.
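To illustrate the idempotency-key idea from the list above, here is a minimal in-memory sketch. `PaymentProcessor` and its fields are hypothetical; in production the key-to-result mapping would need to live in a durable store shared by the old and new environments, typically enforced with a unique constraint.

```python
class PaymentProcessor:
    """Toy processor that charges at most once per idempotency key."""

    def __init__(self):
        self._results = {}     # idempotency key -> stored result
        self.charges_made = 0  # number of real charges performed

    def charge(self, idempotency_key, amount_cents):
        # A repeated key returns the stored result instead of charging again.
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        self.charges_made += 1
        result = {
            "charge_id": f"ch_{self.charges_made}",
            "amount_cents": amount_cents,
        }
        self._results[idempotency_key] = result
        return result


processor = PaymentProcessor()
first = processor.charge("order-1001", 4999)
retry = processor.charge("order-1001", 4999)  # e.g. replayed during cutover
```

Deriving the key from something stable, such as the order ID, means a retry from either environment maps to the same charge.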

Hands-on Checklist: Zero Downtime Migration Runbook

Cover these steps in your runbook:

- Pre-flight: Back up data, enable feature flags, and set up monitoring.

- Cutover: Freeze any changes, deploy the new version, and switch traffic over.

- Verification: Test core user flows (login, checkout, etc.) and watch metrics closely.

- Rollback: Establish clear failure criteria (e.g., error rate > X%) and be ready to revert if triggered.

- Post-migration: Decommission the old environment and remove any temporary code or feature flags.

Conclusion

Successful zero downtime migrations align your platform’s strengths with your business roadmap, not industry hype. Assess your team’s capabilities early, plan accordingly, and share your migration experiences to help others achieve smooth, disruption-free transitions.
