Here is a quick intro to Automated machine learning

Automated machine learning, also referred to as automated ML or AutoML, is the process of automating the time-consuming, iterative tasks of machine learning model development. It allows data scientists, analysts, and developers to build ML models with high scale, efficiency, and productivity all while sustaining model quality.

Traditional machine learning model development is resource-intensive, requiring significant domain knowledge and time to produce and compare dozens of models. With automated machine learning, you’ll accelerate the time it takes to get production-ready ML models with great ease and efficiency.

Now moving to questions and answers,

1. what is Automated machine learning?

Answer: Automated machine learning (AutoML) is the process of automating the tasks of applying machine learning to real-world problems. AutoML potentially includes every stage from beginning with a raw dataset to building a machine learning model ready for deployment. AutoML was proposed as an artificial intelligence-based solution to the growing challenge of applying machine learning.[1][2] The high degree of automation in AutoML aims to allow non-experts to make use of machine learning models and techniques without requiring them to become experts in machine learning. Automating the process of applying machine learning end-to-end additionally offers the advantages of producing simpler solutions, faster creation of those solutions, and models that often outperform hand-designed models.

2. What are the different types of Machine Learning?

Answer: There are 3 different types of machine learning:

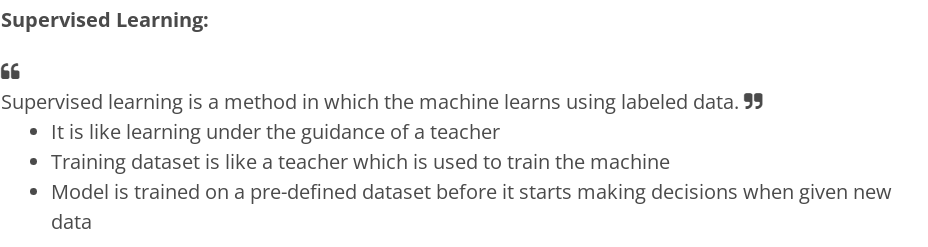

- Supervised Learning

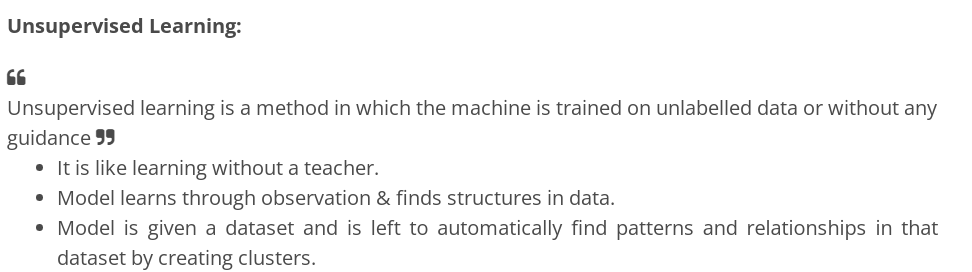

- Unsupervised Learning

- Reinforcement Learning

Answer:

- Suppose your friend invites you to his party where you meet total strangers. Since you have no idea about them, you will mentally classify them on the basis of gender, age group, dressing, etc.

- In this scenario, the strangers represent unlabeled data and the process of classifying unlabeled data points is nothing but unsupervised learning.

- Since you didn’t use any prior knowledge about people and classified them on-the-go, this becomes an unsupervised learning problem.

4. In what manners does Deep Learning differ from Machine Learning?

Answer:

5. What do you understand by Precision and Recall?

Answer:

- Imagine that, your girlfriend gave you a birthday surprise every year for the last 10 years. One day, your girlfriend asks you: ‘Sweetie, do you remember all the birthday surprises from me?’

- To stay on good terms with your girlfriend, you need to recall all the 10 events from your memory.Therefore, recall is the ratio of the number of events you can correctly recall, to the total number of events.

- If you can recall all 10 events correctly, then, your recall ratio is 1.0 (100%) and if you can recall 7 events correctly, your recall ratio is 0.7 (70%)

However, you might be wrong in some answers.

- For example, let’s assume that you took 15 guesses out of which 10 were correct and 5 were wrong. This means that you can recall all events but not so precisely

- Therefore, precision is the ratio of the number of events you can correctly recall, to the total number of events you can recall (mix of correct and wrong recalls).

- From the above example (10 real events, 15 answers: 10 correct, 5 wrong), you get 100% recall but your precision is only 66.67% (10 / 15)

6. What do you understand by selection bias?

Answer:

- It is a statistical error that causes a bias in the sampling portion of an experiment.

- The error causes one sampling group to be selected more often than other groups included in the experiment.

- Selection bias may produce an inaccurate conclusion if the selection bias is not identified.

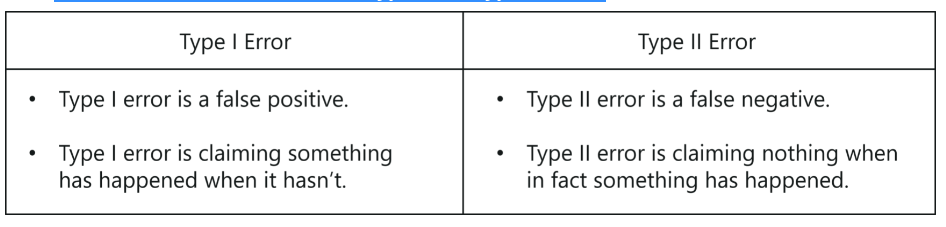

7. What’s the difference between Type I and Type II errors?

Answers:

Answer:

- Gini Impurity and Entropy are the metrics used for deciding how to split a Decision Tree.

- Gini measurement is the probability of a random sample being classified correctly if you randomly pick a label according to the distribution in the branch.

- Entropy is a measurement to calculate the lack of information. You calculate the Information Gain (difference in entropies) by making a split. This measure helps to reduce the uncertainty about the output label.

9. What is the difference between Entropy and Information Gain?

Answer:

- Entropy is an indicator of how messy your data is. It decreases as you reach closer to the leaf node.

- The Information Gain is based on the decrease in entropy after a dataset is split on an attribute. It keeps on increasing as you reach closer to the leaf node.

10. What are collinearity and multicollinearity?

Answer:

- Collinearity occurs when two predictor variables (e.g., x1 and x2) in a multiple regression have some correlation.

- Multicollinearity occurs when more than two predictor variables (e.g., x1, x2, and x3) are inter-correlated.

11. What is Cluster Sampling?

Answer:

- It is a process of randomly selecting intact groups within a defined population, sharing similar characteristics.

- A cluster Sample is a probability sample where each sampling unit is a collection or cluster of elements.

- For example, if you’re clustering the total number of managers in a set of companies, in that case, managers (samples) will represent elements and companies will represent clusters.

12. What is the difference between Data Mining and Machine Learning?

Answer:

Data mining can be described as the process in which the structured data tries to abstract knowledge or interesting unknown patterns. During this process, machine learning algorithms are used.

Machine learning represents the study, design, and development of the algorithms which provide the ability to the processors to learn without being explicitly programmed.

13. What is the meaning of Overfitting in Machine learning?

Answer: Overfitting can be seen in machine learning when a statistical model describes random error or noise instead of the underlying relationship. Overfitting is usually observed when a model is excessively complex. It happens because of having too many parameters concerning the number of training data types. The model displays poor performance, which has been overfitted.

14. Why does overfitting occur?

Answer: The possibility of overfitting occurs when the criteria used for training the model is not as per the criteria used to judge the efficiency of a model.

15. What is the method to avoid overfitting?

Answer: Overfitting occurs when we have a small dataset, and a model is trying to learn from it. By using a large amount of data, overfitting can be avoided. But if we have a small database and are forced to build a model based on that, then we can use a technique known as cross-validation. In this method, a model is usually given a dataset of known data on which the training data set is run and a dataset of unknown data against which the model is tested. The primary aim of cross-validation is to define a dataset to “test” the model in the training phase. If there is sufficient data, ‘Isotonic Regression’ is used to prevent overfitting.

16. How is KNN different from k-means?

Answer: KNN or K nearest neighbors is a supervised algorithm that is used for classification purposes. In KNN, a test sample is given as the class of the majority of its nearest neighbors. On the other side, K-means is an unsupervised algorithm that is mainly used for clustering. In k-means clustering, it needs a set of unlabeled points and a threshold only. The algorithm further takes unlabeled data and learns how to cluster it into groups by computing the mean of the distance between different unlabeled points.

17. What are the different types of Algorithm methods in Machine Learning?

Answer:

18. What do you understand by the Reinforcement Learning technique?

Answer: Reinforcement learning is an algorithm technique used in Machine Learning. It involves an agent that interacts with its environment by producing actions & discovering errors or rewards. Reinforcement learning is employed by different software and machines to search for the best suitable behavior or path it should follow in a specific situation. It usually learns on the basis of reward or penalty given for every action it performs.

19. What is the trade-off between bias and variance?

Answer: Both bias and variance are errors. Bias is an error due to erroneous or overly simplistic assumptions in the learning algorithm. It can lead to the model under-fitting the data, making it hard to have high predictive accuracy and generalize the knowledge from the training set to the test set.

Variance is an error due to too much complexity in the learning algorithm. It leads to the algorithm being highly sensitive to high degrees of variation in the training data, which can lead the model to overfit the data.

To optimally reduce the number of errors, we will need to tradeoff bias and variance.

20. What do you mean by ensemble learning?

Answer: Numerous models, such as classifiers are strategically made and combined to solve a specific computational program which is known as ensemble learning. The ensemble methods are also known as committee-based learning or learning multiple classifier systems. It trains various hypotheses to fix the same issue. One of the most suitable examples of ensemble modeling is the random forest trees where several decision trees are used to predict outcomes. It is used to improve the classification, function approximation, prediction, etc. of a model.

21. What is a model selection in Machine Learning?

Answer: The process of choosing models among diverse mathematical models, which are used to define the same data is known as Model Selection. Model learning is applied to the fields of statistics, data mining, and machine learning.

22. What are the three stages of building the hypotheses or model in machine learning?

Answer:

23. Describe ‘Training set’ and ‘training Test’.

Answer: In various areas of information of machine learning, a set of data is used to discover the potentially predictive relationship, which is known as ‘Training Set’. The training set is an example that is given to the learner. Besides, the ‘Test set’ is used to test the accuracy of the hypotheses generated by the learner. It is the set of instances held back from the learner. Thus, the training set is distinct from the test set.

24. What do you understand by ILP?

Answer: ILP stands for Inductive Logic Programming. It is a part of machine learning which uses logic programming. It aims at searching patterns in data that can be used to build predictive models. In this process, the logic programs are assumed as a hypothesis.

25. Describe Precision and Recall?

Answer:

Precision and Recall both are the measures that are used in the information retrieval domain to measure how well an information retrieval system reclaims the related data as requested by the user.

Precision can be said as a positive predictive value. It is the fraction of relevant instances among the received instances.

On the other side, recall is the fraction of relevant instances that have been retrieved over the total amount of relevant instances. The recall is also known as sensitivity.

26. What are the functions of Supervised Learning?

Answer:

- Classification

- Speech Recognition

- Regression

- Predict Time Series

- Annotate Strings

27. What do you understand by algorithm-independent machine learning?

Answer: Algorithm independent machine learning can be defined as machine learning, where mathematical foundations are independent of any particular classifier or learning algorithm.

28. What are the functions of Unsupervised Learning?

Answer:

29. What do you mean by Genetic Programming?

Answer: Genetic Programming (GP) is almost similar to an Evolutionary Algorithm, a subset of machine learning. Genetic programming software systems implement an algorithm that uses random mutation, a fitness function, crossover, and multiple generations of evolution to resolve a user-defined task. The genetic programming model is based on testing and choosing the best option among a set of results.

30. Explain True Positive, True Negative, False Positive, and False Negative in Confusion Matrix with an example.

Answer:

30. What is Bagging and Boosting?

Answer:

- Bagging is a process in ensemble learning which is used for improving unstable estimation or classification schemes.

- Boosting methods are used sequentially to reduce the bias of the combined model.

31. Which are the two components of the Bayesian logic program?

Answer: A Bayesian logic program consists of two components:

Logical

*It contains a set of Bayesian Clauses, which capture the qualitative structure of the domain.

Quantitative

*It is used to encode quantitative information about the domain.

32. Describe dimension reduction in machine learning.

Answer:

- Dimension reduction is the process that is used to reduce the number of random variables under consideration.

- Dimension reduction can be divided into feature selection and extraction.

33. Why instance-based learning algorithm sometimes referred to as a Lazy learning algorithm?

Answer: In machine learning, lazy learning can be described as a method where induction and generalization processes are delayed until classification is performed. Because of the same property, an instance-based learning algorithm is sometimes called a lazy learning algorithm.

34. What are the Recommended Systems?

Answer: Recommended System is a sub-directory of information filtering systems. It predicts the preferences or rankings offered by a user to a product. According to the preferences, it provides similar recommendations to a user. Recommendation systems are widely used in movies, news, research articles, products, social tips, music, etc.

35. What do you understand by Underfitting?

Answer: Underfitting is an issue when we have a low error in both the training set and the testing set. Few algorithms work better for interpretations but fail for better predictions.

36. What is Regularization? What kind of problems does regularization solve?

Answer:

Regularization is a form of regression, which constrains/ regularizes or shrinks the coefficient estimates towards zero. In other words, it discourages learning a more complex or flexible model to avoid the risk of overfitting. It reduces the variance of the model, without a substantial increase in its bias.

Regularization is used to address overfitting problems as it penalizes the loss function by adding a multiple of an L1 (LASSO) or an L2 (Ridge) norm of weights vector w.

37. Do you think that treating a categorical variable as a continuous variable would result in a better predictive model?

Answer: For a better predictive model, the categorical variable can be considered as a continuous variable only when the variable is ordinal in nature.

38. What do you understand by the F1 score?

Answer: The F1 score represents the measurement of a model’s performance. It is referred to as a weighted average of the precision and recall of a model. The results tending to 1 are considered as the best, and those tending to 0 are the worst. It could be used in classification tests, where true negatives don’t matter much.

39. What is the meaning of Variance Error in ML algorithms?

Answer: Variance error is found in machine learning algorithms that are highly complex and pose difficulties in understanding them. As a result, you can find a greater extent of variation in the training data. Subsequently, the machine learning model would overfit the data. In addition, you can also find excessive noise for training data which is completely inappropriate for the test data.

40. What is the ROC curve, and how does it work?

Answer: The receiver Operating Characteristic (ROC) curve provides a pictorial representation of the contrast level between false-positive rates and true positive rates. The estimates of true and false positive rates are taken at multiple thresholds. The ROC is ideal as a proxy for measuring trade-offs and sensitivity associated with a model. According to the measurements of sensitivity and trade-off, the curve can trigger false alarms.

41. What is precision, and what is a recall?

Answer: The recall is the number of true positive rates identified for a specific total number of datasets. Precision involves predictions for positive values claimed by a model as compared to the number of actually claimed positives. You can assume this as a special case for probability with respect to mathematics.

42. What are Naive Bayes?

Answer: Naive Bayes is ideal for practical application in text mining. However, it also involves an assumption that it is not possible to visualize in real-time data. Naive Bayes involves the calculation of conditional probability from the pure product of individual probabilities of different components. The condition in such cases would imply complete independence for the features that are practically not possible or very difficult. Candidates should expect this type of follow-up machine learning interview questions.

43. How is the generative model different from the discriminative model?

Answer: The generative model will review the data categories. However, a discriminative model would review the difference between various data categories. Generally, discriminative models have better performance than generative models in classification tasks.

44. In which situation is classification better than regression?

Answer: Classification results in the generation of discrete values and dataset according to specific categories. On the other hand, regression provides continuous results with better demarcations between individual points. Classification is better than regression when you need the results to reflect the presence of data points in explicit categories in the dataset. Classification is better if you just want to find whether a name is female or male. Regression is ideal if you want to find out the correlation of the name with male and female names.

45. Name the five most popular machine learning algorithms.

Answer: The most popular machine learning algorithms are decision trees, probabilistic networks, and neural networks. The other two popular ML algorithms are support vector machines and neural networks or backpropagation networks.

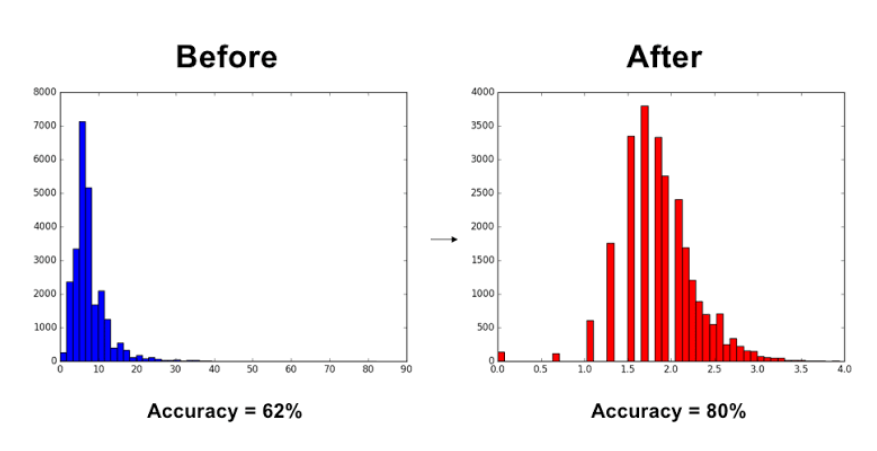

46. What is the use of Box-Cox transformation?

Answer: Box-Cox transformation is a type of powerful transformation that helps in the transformation of data for normalizing distribution. Box-Cox transformation is also ideal for stabilizing variance by eliminating heteroskedasticity.

47. What is the difference between inductive and deductive learning?

Answer: Inductive learning involves using observations for reaching conclusions. Deductive learning involves referring to conclusions for developing observations.

48. What are the different components of relational evaluation techniques?

Answer: The significant components of relational evaluation techniques include data acquisition, query type, significance test, and scoring metric. The other important components include a cross-validation technique and ground truth acquisition.

49. What is a recommendation system?

Answer: Answer: A recommendation system is a subclass in the information filtering system for predicting preference that a user would assign to an item. The best techniques for recommendation systems are collaborative filtering and content-based filtering.

50. Can you explain about bagging?

Answer: Bagging is the short-form for bootstrap aggregating. Bagging is actually a meta-algorithm that takes M subsamples from the initial dataset as inputs. Subsequently, the algorithm trains a predictive model on the subsamples. The final model is a product of averaging bootstrapped models and provides better results.

- Apache Lucene Query Example - April 8, 2024

- Google Cloud: Step by Step Tutorials for setting up Multi-cluster Ingress (MCI) - April 7, 2024

- What is Multi-cluster Ingress (MCI) - April 7, 2024