What is the Google Search Engine?

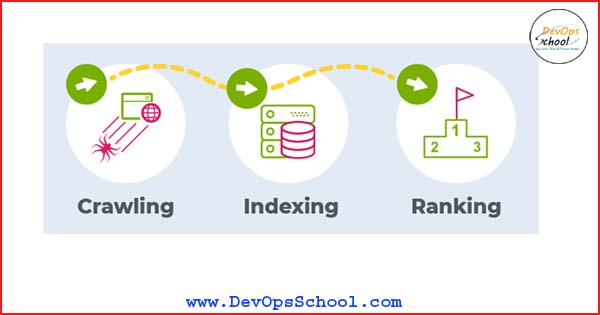

A search engine is a web-based tool that enables users to locate information on the World Wide Web. Popular examples are Google, Yahoo!, and Bing (formerly MSN Search). During the crawling process, search engines send out automated software applications (known as robots, bots, crawlers, or spiders) to find new and updated content. These programs travel along the Web, following links from page to page and site to site. The information gathered by the spiders is used to create a searchable index of the Web.
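To make the idea of a "searchable index" more concrete, here is a minimal sketch of an inverted index in Python. It is only an illustration, not how Google or any real search engine is implemented. The `pages` dictionary, the `example.com` URLs, and the function names `build_index` and `search` are all assumptions made up for this example.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map each lowercase word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word of the query (simple AND search)."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical text that a spider might have gathered from three pages.
pages = {
    "https://example.com/a": "Web crawlers follow links",
    "https://example.com/b": "Search engines build an index",
    "https://example.com/c": "Crawlers feed the search index",
}

index = build_index(pages)
print(search(index, "search index"))
```

A query is answered by looking up each word in the index and intersecting the URL sets, so the pages themselves do not need to be re-read at search time.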

What is the role of a web crawler?

A web crawler gathers the pages that a search engine later returns when someone searches for something. Web crawlers typically identify themselves to a web server by using the User-Agent field of an HTTP request. They crawl entire websites by following internal links, which lets them understand how websites are structured and what information they include. Crawling is the technical term for automatically accessing a website and obtaining data via a software program.
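As a rough sketch of these two points, the Python snippet below prepares an HTTP request that carries a User-Agent header and checks whether a link is internal to a site. It uses only the standard library and does not send anything over the network. The bot name `ExampleBot/1.0`, the `example.com` URLs, and the helper names `make_request` and `is_internal` are hypothetical.

```python
from urllib.parse import urljoin, urlparse
from urllib.request import Request

# Hypothetical crawler identity; real crawlers publish their own strings.
USER_AGENT = "ExampleBot/1.0 (+https://example.com/bot)"


def make_request(url):
    """Prepare (but do not send) a request that identifies the crawler."""
    return Request(url, headers={"User-Agent": USER_AGENT})


def is_internal(base_url, link):
    """Return True if link, resolved against base_url, stays on the same host."""
    absolute = urljoin(base_url, link)
    return urlparse(absolute).netloc == urlparse(base_url).netloc


req = make_request("https://example.com/")
# urllib stores header names capitalized, hence "User-agent" here.
print(req.get_header("User-agent"))
print(is_internal("https://example.com/page", "/about"))
print(is_internal("https://example.com/page", "https://other.org/"))
```

Passing `req` to `urllib.request.urlopen` would actually send the request; the server would then see the User-Agent string in its logs.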

Finding information by crawling

The web is like an ever-growing library with billions of books and no central filing system. We use software known as web crawlers to discover publicly available web pages. Crawlers look at web pages and follow links on those pages, much like you would if you were browsing content on the web. They go from link to link and bring data about those web pages back to Google’s servers.
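The link-to-link behaviour described above can be sketched as a breadth-first traversal. The Python example below is a simplified illustration, not Google's crawler: instead of fetching real pages it reads from `FAKE_WEB`, a made-up in-memory site, and the names `LinkExtractor` and `crawl` are assumptions for this example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=100):
    """Visit pages breadth-first, following links; return URLs in visit order."""
    seen = {start_url}
    queue = deque([start_url])
    order = []
    while queue and len(order) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page could not be fetched
            continue
        order.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return order


# Hypothetical in-memory "web" so the sketch runs without network access.
FAKE_WEB = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/c">C</a>',
    "https://example.com/b": '<a href="/">home</a>',
    "https://example.com/c": "no links here",
}

visited = crawl("https://example.com/", FAKE_WEB.get)
print(visited)
```

The `seen` set keeps the crawler from visiting the same page twice, which matters because pages often link back to each other. A real crawler would replace `FAKE_WEB.get` with a function that downloads the page, and would also respect rules such as robots.txt.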

How can I see how my site is crawled?

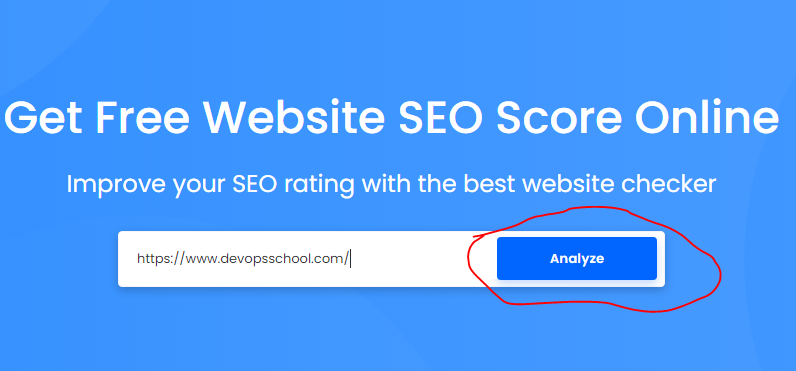

Check our guide on how to crawl a website with Sitechecker.

Enter your domain at https://sitechecker.pro/

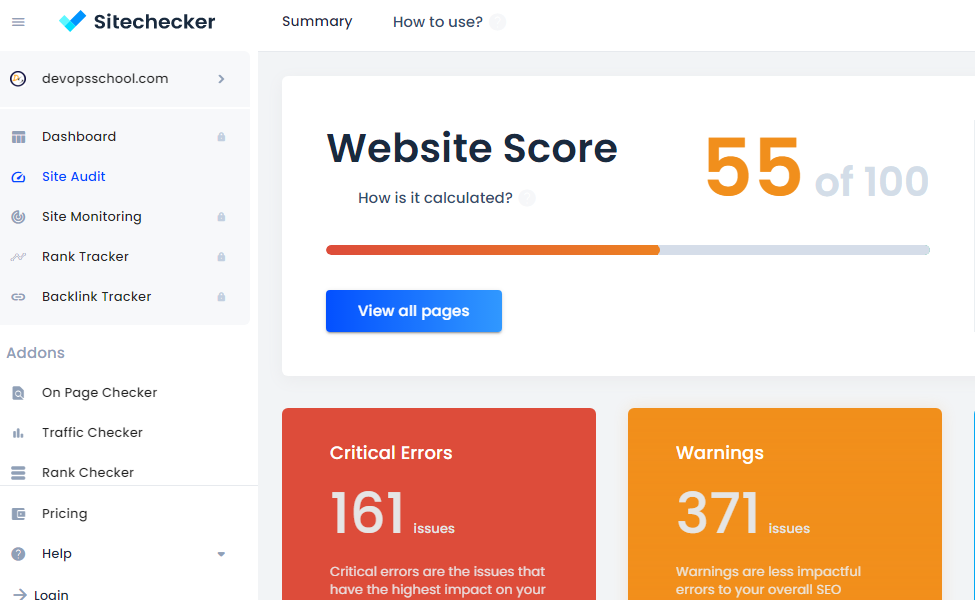

Use advanced settings to specify rules of site crawling.

Watch how site crawler collects data in real time.

Then check the list of all technical issues Sitecheckerbot has found on the website. Start fixing them step by step, from the most critical errors to the less important ones. When you have finished fixing the issues, recrawl the website to make sure the Website Score has gone up.
